Homeworks

There will be two problem sets per week. Each problem set specifies the programming language that you must use for the assignment, the due date, and the purpose of the assignment.

Homeworks that are due on Thursday are "finger" exercises, intended to give you additional practice of the current material (to "keep your fingers moving") and will be automatically graded. They will be done solo, and will cover what was learned that week (possibly up to and including Thursday’s lecture). Homeworks that are due on Tuesday will be more involved, will be done with a partner, and will be graded by some combination of automatic grading and manual, line-by-line written feedback. (Not every assignment will be autograded, but many will.)

Autograding Feedback

It may take a few seconds to a few minutes for the feedback to arrive. Be patient! And see details below.

Style: First and most simply, Handins will double-check that your code is stylistically readable. (See The Style if you’re unsure what this means.) Readable code is much easier to, well, read.

Substance: Second, there are many ways for programs to be wrong: the implementation may be incorrect relative to the problem, the test cases may be incorrect relative to the implementation, or your understanding of the problem itself might be wrong. Part of the goal of automated testing is to help you narrow down which of these possibilities it might be.

To evaluate your implementation, we run it both against your own tests and against our tests. Your implementation should pass all of these tests. If it fails one of our tests, then you should debug your implementation. If it passes all of our tests but fails one of yours, then you should still reexamine your implementation, but you should also reexamine your test suite, since the test itself might be buggy.

To evaluate your test suite, we run it both against your own implementation and against our implementations. (This approach is based on "Executable Examples for Programming Problem Comprehension" by Wrenn and Krishnamurthi.) Our implementations include a correct one, called the "wheat," and a handful of (purposefully!) buggy implementations, called the "chaffs." The goal of your test suite is to distinguish the wheat from the chaffs: the wheat should pass your test suite, whereas every chaff should fail it (i.e., each chaff should fail at least one of your tests). If the wheat fails your test suite, then you should debug your test suite. If a chaff passes your test suite, then you should write more tests.
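The wheat/chaff idea can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the problem (computing a median), the function names, and the representation of a test suite as (input, expected) pairs are hypothetical, and the course's actual autograder and assignment language may work quite differently.

```python
# Hypothetical problem: return the median of a non-empty list of numbers.
# All names below are illustrative; none come from the real autograder.

def wheat(nums):
    """Correct reference implementation (the "wheat")."""
    s = sorted(nums)
    mid = len(s) // 2
    if len(s) % 2 == 1:
        return s[mid]
    return (s[mid - 1] + s[mid]) / 2

def chaff_no_sort(nums):
    """Buggy on purpose: forgets to sort the input."""
    mid = len(nums) // 2
    if len(nums) % 2 == 1:
        return nums[mid]
    return (nums[mid - 1] + nums[mid]) / 2

def chaff_even_case(nums):
    """Buggy on purpose: mishandles even-length lists."""
    s = sorted(nums)
    return s[len(s) // 2]

def passes_suite(impl, tests):
    """An implementation passes a suite only if it passes every test."""
    return all(impl(list(inp)) == expected for inp, expected in tests)

def evaluate_suite(tests, wheat_impl, chaffs):
    """Run one test suite against the wheat and against each chaff."""
    return {
        "wheat_passes": passes_suite(wheat_impl, tests),
        "chaffs_caught": [c.__name__ for c in chaffs if not passes_suite(c, tests)],
        "chaffs_missed": [c.__name__ for c in chaffs if passes_suite(c, tests)],
    }

chaffs = [chaff_no_sort, chaff_even_case]

# A suite that exercises unsorted input and the even-length case.
strong_suite = [([3, 1, 2], 2), ([1, 2, 3, 4], 2.5)]
# A suite with a single, already-sorted, odd-length example.
weak_suite = [([1, 2, 3], 2)]

strong_report = evaluate_suite(strong_suite, wheat, chaffs)
weak_report = evaluate_suite(weak_suite, wheat, chaffs)
print(strong_report)
print(weak_report)
```

In this sketch the wheat passes both suites, so neither suite is itself buggy. The stronger suite catches both chaffs, while the weaker one lets both slip through, which is the signal to write more tests.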

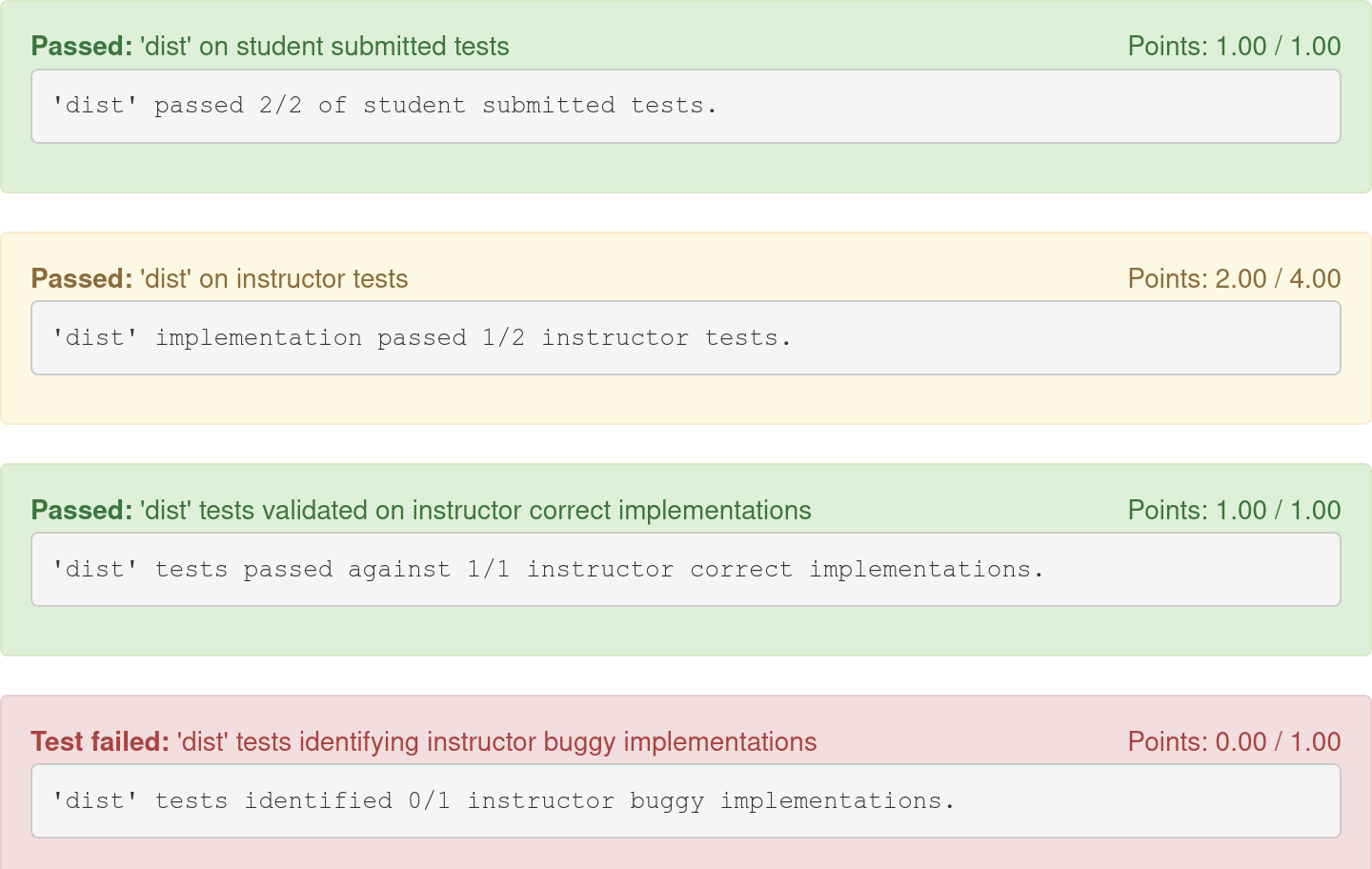

For example, consider the following output, which (again) you can see by clicking on the Grader output link:

1. Student Implementation / Student Tests. The student implementation passed both of the student tests, so the student implementation is consistent with the student tests.

2. Student Implementation / Instructor Tests. The student implementation fails one of the instructor tests, so the student implementation is definitely buggy. Given the previous information, we can’t be sure whether the test suite is also buggy.

3. Instructor Wheat Implementation / Student Tests. The wheat passes the student test suite, so the student test suite is not buggy. Given the previous information, we can conclude decisively that the failure in (2) is caused by the implementation, not the tests. However, the student test suite is insufficiently detailed to have caught the error.

4. Instructor Chaff Implementations / Student Tests. Every chaff fails the student test suite, so the test suite covers a reasonable baseline set of behaviors. However, this does not mean that the suite is complete; in fact, in this example we know that it is indeed incomplete.
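The reasoning across the four results can be summarized as a small decision procedure. The sketch below is only an illustration of the rules stated on this page: the function name `diagnose`, its parameters, and the advice strings are hypothetical and are not part of the autograder's actual output.

```python
def diagnose(impl_passes_own_tests, impl_passes_instructor_tests,
             wheat_passes_student_tests, chaffs_missed):
    """Map the four autograder results to next steps (illustrative only).

    chaffs_missed is the number of chaffs that passed the student suite.
    """
    advice = []
    if not impl_passes_instructor_tests:
        # Failing an instructor test means the implementation has a bug.
        advice.append("debug your implementation")
    elif not impl_passes_own_tests:
        # Passing our tests but failing your own: either side may be wrong.
        advice.append("reexamine your implementation and your test suite")
    if not wheat_passes_student_tests:
        # A correct implementation failing your tests means a test is wrong.
        advice.append("debug your test suite")
    if chaffs_missed > 0:
        # A buggy implementation slipped past your tests.
        advice.append("write more tests")
    if (impl_passes_own_tests and not impl_passes_instructor_tests
            and wheat_passes_student_tests):
        # Your suite is valid but did not expose your own bug.
        advice.append("add tests that would have caught this bug")
    return advice

# The example output discussed above: own tests pass, an instructor test
# fails, the wheat passes the student suite, and every chaff is caught.
example = diagnose(True, False, True, 0)
print(example)
```

For the example output, this yields advice to debug the implementation and to add tests that would have exposed the bug, matching the conclusions drawn in the walkthrough.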

It’s important to note that passing all of the autograder tests does not mean that your implementation and test suite are completely correct. All it does is provide you with preliminary feedback so you can be sure you’re on the right track. For example, your implementation may pass our test suite but still have a bug. Alternatively, your test suite might catch all of our chaffs but miss some serious bugs.

Resubmitting

Note: The autograding delay is independent of whether your homework is late: if a submission is received before the deadline but the autograding is not complete by the deadline, the submission still counts as “on time.”

A Complete Guide to the Handin Server