Due Wednesday Mar 26, 11pm (originally Monday Mar 24)

Worth: 9% of your final grade

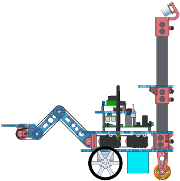

In the first part of this lab you’ll assemble the mast and camera on your robot.

In the second part of the lab you’ll write high level code to capture images and process them to find an object with a known color.

In the third part you will use image-based visual servoing to drive the robot to the detected object location and grasp it.

- Mast

  - Get the mast/camera assembly with your group number.

  - Insert the mast into the bracket at the rear of the robot with the camera facing forward.

  - Attach the camera to a USB port on the HLP.

  - Stow the extra USB cable inside the mast.

  - Aim the camera down and gently tighten the thumbscrews.

Follow similar instructions as for lab 2 to update your svn checkout and copy the lab 4 framework into robotics/gN/l4.

On the Pandaboard, go to robotics/ohmm-sw/hlp/cvutils and run make to build some C++ OpenCV utilities that can be helpful when working with the camera. Then in robotics/ohmm-sw/hlp/yavta run make to build yavta, which can be used to control parameters of the camera from the command line.

Then rebuild and flash the LLP monitor code using the same procedure as for lab 2.

Finally, rebuild the HLP OHMM jarfile using the same procedure as for lab 2.

Establish an SSH connection to your HLP. Do not enable X11 forwarding (i.e. do not include the -X switch to ssh). Change to your robotics/gN/l4 directory and run

> make

> ./run-class CvDemo

Then on another machine (or the one from which you made the ssh connection) open a web browser and go to the URL http://IP:8080/server.html, where IP is the IP address of your HLP. You should see a 320x240 camera image updating at about 2 FPS.

If this does not work, you can at least check whether the camera itself is working by temporarily enabling X11 forwarding, i.e.

> ssh -X -C

(please use the -C option to enable compression, which reduces network congestion). If you are ssh-ing from a machine that is running its own X server (including Linux, OS X if you install the free XQuartz server, or Windows if you install an X server), then a window should pop up with the image.

By default the camera is in an auto exposure mode where it attempts to automatically set the frame exposure time based on the average brightness in the scene. In many cases this seems to cause the image of the pink “lollipop” grasp object to appear washed out or to show a large white specular highlight, which causes problems with color detection.

OpenCV does have some API calls for adjusting camera parameters, but they may not work correctly. YMMV. We provide the yavta program as another means to set camera parameters from the Linux command line. Once the parameters have been set they should remain “sticky” until the camera is unplugged or the HLP is rebooted. Running yavta is not guaranteed to work while another program (like your code) is accessing the camera. Run yavta first to adjust the exposure, then run your code.

Once you have compiled yavta as described above, you can set the exposure like this (on the Pandaboard):

> cd robotics/ohmm-sw/hlp/yavta

> ./set-exposure 1000

That would set the camera to manual exposure 1000. Valid exposure numbers are in the range 1 to 10000, with lower numbers giving longer exposure. 1000 seems to be a reasonable starting point; it gives rather dim images in some indoor settings but is usually not overexposed in bright areas such as near windows. You can also return the camera to auto exposure with

> cd robotics/ohmm-sw/hlp/yavta

> ./set-exposure auto
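Because yavta should run before your program opens the camera, one option is to launch the set-exposure script from Java at startup. The sketch below is a minimal, hypothetical helper (ExposureCommand is not part of the provided framework); it assumes the script is invoked exactly as in the commands above and only enforces the 1 to 10000 range stated there.

```java
import java.io.File;
import java.io.IOException;
import java.util.Arrays;
import java.util.List;

/** Hypothetical helper that runs yavta's set-exposure script before your program opens the camera. */
final class ExposureCommand {
  static final int MIN_EXPOSURE = 1, MAX_EXPOSURE = 10000;

  /** Builds the command line; value must be "auto" or an integer in 1..10000. */
  static List<String> build(File yavtaDir, String value) {
    if (!value.equals("auto")) {
      int e = Integer.parseInt(value); // NumberFormatException for non-numeric input
      if (e < MIN_EXPOSURE || e > MAX_EXPOSURE)
        throw new IllegalArgumentException("exposure out of range: " + e);
    }
    // Absolute path, so the command still resolves after the working directory changes.
    return Arrays.asList(new File(yavtaDir, "set-exposure").getAbsolutePath(), value);
  }

  /** Runs the script with yavtaDir as the working directory and returns its exit code. */
  static int run(File yavtaDir, String value) throws IOException, InterruptedException {
    Process p = new ProcessBuilder(build(yavtaDir, value))
        .directory(yavtaDir) // in case the script expects to run from its own directory
        .inheritIO()         // show the script's output on this console
        .start();
    return p.waitFor();
  }
}
```

For example, `ExposureCommand.run(new File(System.getProperty("user.home"), "robotics/ohmm-sw/hlp/yavta"), "1000")` before your program starts capturing, assuming your checkout is in your home directory.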

Write a program that runs on the HLP and processes incoming frames from the camera. We recommend you start at a fairly low resolution, say 320x240, and aim for about 10 frames per second. We have provided a Java base class ohmm.CvBase that we strongly recommend you use.
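If your per-frame processing finishes early, one simple way to hold roughly 10 FPS is to sleep for whatever remains of each frame period. A minimal sketch with a hypothetical FrameThrottle class; CvBase may already pace its capture loop, in which case you do not need this.

```java
/** Hypothetical helper that paces a processing loop to a target frame rate. */
final class FrameThrottle {
  private final long periodMillis;

  FrameThrottle(double targetFps) {
    if (targetFps <= 0) throw new IllegalArgumentException("fps must be positive");
    this.periodMillis = Math.round(1000.0 / targetFps);
  }

  /** Milliseconds left in the current frame period given the time already spent (never negative). */
  long remainingMillis(long elapsedMillis) {
    return Math.max(0L, periodMillis - elapsedMillis);
  }

  /** Call once per frame with the time (System.currentTimeMillis()) at which the frame arrived. */
  void pace(long frameStartMillis) throws InterruptedException {
    long remaining = remainingMillis(System.currentTimeMillis() - frameStartMillis);
    if (remaining > 0) Thread.sleep(remaining);
  }
}
```

If processing routinely takes longer than the period (100 ms at 10 FPS), the throttle never sleeps, which is a sign to lower the resolution or simplify the processing.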

You must have a way to display the incoming frames on a remote machine for debugging purposes. This debug view does not have to have the same resolution and frame rate as your actual processing. As the resolution and frame rate increase, the bandwidth required to transmit the images also increases. For the same reason, we also strongly recommend that you use some form of image compression. The provided ohmm.CvBase class uses an ohmm.ImageServer that takes care of this requirement for you by starting a web server on the robot. The CvDemo program used for testing is just an example of its use.
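For a sense of scale, here is the uncompressed bandwidth at the suggested 320x240 resolution and 10 FPS, assuming 3 bytes per pixel (8-bit color):

$$
320 \times 240 \times 3\ \tfrac{\text{B}}{\text{frame}} \times 10\ \tfrac{\text{frames}}{\text{s}} = 2{,}304{,}000\ \tfrac{\text{B}}{\text{s}} \approx 18.4\ \tfrac{\text{Mbit}}{\text{s}}
$$

Doubling the resolution in each dimension quadruples this figure, which is why a compressed format such as JPEG is preferable for the debug view.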

Adapt your program so that it can respond to mouse clicks and keypresses in the debug window. Whenever the mouse is clicked have it display (either in the remote debug graphics or simply on the HLP text console from which you launched the program) the image coordinates of the mouse pointer at the time of the click. Similarly, whenever an alphanumeric key is pressed over the debug image print out a message indicating which key was pressed. These requirements are also already implemented for you if you use CvBase or ImageServer, but make sure you know how to use them.
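If you ever need this behavior outside CvBase/ImageServer, the logic is small. A minimal sketch; the class and the method names onMouseClick and onKey are hypothetical, not the CvBase callback API, so wire them to whatever callbacks your debug view actually provides. It also latches the r key, which this lab later uses to start the visual servo controller.

```java
/** Hypothetical input handler; connect these methods to your debug view's callbacks. */
final class DebugInput {
  private boolean runRequested = false;
  private int lastClickX = -1, lastClickY = -1;

  /** Records and prints the image coordinates of a mouse click. */
  synchronized String onMouseClick(int x, int y) {
    lastClickX = x;
    lastClickY = y;
    String msg = "click at (" + x + ", " + y + ")";
    System.out.println(msg);
    return msg;
  }

  /** Prints alphanumeric key presses; returns null (and prints nothing) for other keys. */
  synchronized String onKey(char key) {
    boolean alnum = (key >= 'a' && key <= 'z') || (key >= 'A' && key <= 'Z') || (key >= '0' && key <= '9');
    if (!alnum) return null;
    if (key == 'r') runRequested = true; // 'r' starts the visual servo controller
    String msg = "key pressed: " + key;
    System.out.println(msg);
    return msg;
  }

  synchronized boolean isRunRequested() { return runRequested; }
  synchronized int lastClickX() { return lastClickX; }
  synchronized int lastClickY() { return lastClickY; }
}
```

The methods are synchronized because GUI or network callbacks usually arrive on a different thread from the frame-processing loop that reads the click position and run flag.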

Develop a blob tracker that begins when the user clicks on a blob. Use that click to set the target color. You may want to average the pixel colors near the mouse click. Track the blob as it moves around in the image, either by moving the object or the camera. You must show a small “+” drawn over the live debugging image that indicates the center of the tracked blob. If more than one blob matches the target color, you may assume that you should track either the largest one or the one closest to the previously tracked blob in each frame.
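A minimal sketch of setting the target color by averaging a small window around the click, plus a per-pixel test against that color. It assumes pixels are available as packed 0xRRGGBB ints in row-major order (an assumption; with JavaCV you would more likely operate on the OpenCV image directly), and TargetColor is a hypothetical name. Matching in RGB is the simplest choice; converting to HSV and thresholding mainly on hue is often more robust to the exposure problems described above.

```java
/** Hypothetical target color from a click. Pixels are packed 0xRRGGBB ints, row-major. */
final class TargetColor {
  final int r, g, b;

  TargetColor(int r, int g, int b) { this.r = r; this.g = g; this.b = b; }

  /** Averages the pixels in a (2*radius+1)^2 window around (cx, cy), clipped to the image. */
  static TargetColor fromClick(int[] pixels, int width, int height, int cx, int cy, int radius) {
    long sr = 0, sg = 0, sb = 0;
    int n = 0;
    for (int y = Math.max(0, cy - radius); y <= Math.min(height - 1, cy + radius); y++) {
      for (int x = Math.max(0, cx - radius); x <= Math.min(width - 1, cx + radius); x++) {
        int p = pixels[y * width + x];
        sr += (p >> 16) & 0xFF;
        sg += (p >> 8) & 0xFF;
        sb += p & 0xFF;
        n++;
      }
    }
    if (n == 0) throw new IllegalArgumentException("click outside image");
    return new TargetColor((int) (sr / n), (int) (sg / n), (int) (sb / n));
  }

  /** True if the pixel is within maxDist (Euclidean distance in RGB) of the target color. */
  boolean matches(int pixel, int maxDist) {
    int dr = ((pixel >> 16) & 0xFF) - r;
    int dg = ((pixel >> 8) & 0xFF) - g;
    int db = (pixel & 0xFF) - b;
    return dr * dr + dg * dg + db * db <= maxDist * maxDist;
  }
}
```

A window radius of 2 or 3 pixels averages out sensor noise at 320x240; the distance threshold for `matches` has to be tuned for your lighting and exposure setting.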

We strongly suggest using OpenCV, possibly via the JavaCV bindings. The provided ohmm.CvBase code uses these libraries. The current version of OpenCV should work; however, we are using a slightly modified (and old) version of JavaCV. These libraries should already be configured correctly on the HLP and on other Linux environments provided with the course (unfortunately not including the standard CCIS Linux environment). If you want to set up your own machine, install OpenCV from the normal distribution, but use the javacv-*.jar and javacpp.jar that we provide in robotics/ohmm-sw/ext/lib/java.
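With OpenCV you would typically threshold the image (e.g. inRange), find connected regions (e.g. findContours), and take the centroid from image moments. The same idea is sketched below in plain Java so the steps are explicit: label 4-connected regions of a boolean color mask with an iterative flood fill, keep the largest, and return its centroid. BlobFinder and the mask representation are hypothetical; drawPlus draws the required “+” marker into a packed-RGB pixel array.

```java
import java.util.ArrayDeque;

/** Hypothetical library-free blob finder over a boolean mask (row-major, width*height). */
final class BlobFinder {
  /** Centroid (cx, cy) and area in pixels of one connected blob. */
  static final class Blob {
    final double cx, cy;
    final int area;
    Blob(double cx, double cy, int area) { this.cx = cx; this.cy = cy; this.area = area; }
  }

  /** Returns the largest 4-connected blob with at least minArea pixels, or null if none. */
  static Blob largest(boolean[] mask, int width, int height, int minArea) {
    boolean[] seen = new boolean[mask.length];
    ArrayDeque<Integer> stack = new ArrayDeque<>();
    Blob best = null;
    for (int start = 0; start < mask.length; start++) {
      if (!mask[start] || seen[start]) continue;
      // Flood-fill one region, accumulating its area and coordinate sums.
      long sumX = 0, sumY = 0;
      int area = 0;
      seen[start] = true;
      stack.push(start);
      while (!stack.isEmpty()) {
        int i = stack.pop();
        int x = i % width, y = i / width;
        sumX += x;
        sumY += y;
        area++;
        if (x > 0) visit(mask, seen, stack, i - 1);
        if (x < width - 1) visit(mask, seen, stack, i + 1);
        if (y > 0) visit(mask, seen, stack, i - width);
        if (y < height - 1) visit(mask, seen, stack, i + width);
      }
      if (area >= minArea && (best == null || area > best.area))
        best = new Blob((double) sumX / area, (double) sumY / area, area);
    }
    return best;
  }

  private static void visit(boolean[] mask, boolean[] seen, ArrayDeque<Integer> stack, int j) {
    if (mask[j] && !seen[j]) { seen[j] = true; stack.push(j); }
  }

  /** Draws a '+' with arms of length half at (cx, cy) into packed-RGB pixels, clipped to the image. */
  static void drawPlus(int[] pixels, int width, int height, int cx, int cy, int half, int rgb) {
    for (int d = -half; d <= half; d++) {
      if (cx + d >= 0 && cx + d < width && cy >= 0 && cy < height) pixels[cy * width + cx + d] = rgb;
      if (cy + d >= 0 && cy + d < height && cx >= 0 && cx < width) pixels[(cy + d) * width + cx] = rgb;
    }
  }
}
```

Build the mask by testing each pixel against your target color; a nonzero minArea discards isolated noise pixels that happen to match.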

Use your object detection program to develop a closed-loop controller that rotates the robot in place to make it face the object. This presupposes that the object is visible somewhere in the image to start with; for this lab, you will just place the provided object somewhere in the camera’s field of view. Your code should work no matter where the object is in relation to the robot, as long as it is initially visible.

We strongly suggest that you begin with a calibration phase where you place the object in the robot’s gripper as it should appear after a successful grip using the same motions as in the grasping part of lab 3. Click on the image of the object in this calibration pose, both to set the target color and to set the target image location for the blob.

Note: A solution for lab 3 is now available at ohmm-sw-site/hlp/ohmm/Grasp.java. Please do not copy/paste/modify this code. Instead, just create a Grasp object (or subclass) and use it in your code.

Next develop a controller (e.g. bang-bang or proportional) to drive in-place rotation that uses the horizontal difference in image pixels between the current blob location and the target location set by the calibration click. Do not engage the visual servo controller (i.e. do not start moving the robot) until the user hits the r key (run). This will allow you to take the object out of the robot’s gripper after the calibration phase and move it to another place (but still in the camera field of view). When the user hits r to begin motion, you should first stow the arm (e.g. in home pose) to keep it out of the way until you are ready to grip the object again.
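A minimal sketch of a proportional controller for the in-place rotation, with a deadband around the calibrated target column and a saturation limit to keep the turn slow. RotationController is hypothetical, and the sign convention (image x grows to the right, positive angular velocity turns the robot counterclockwise) is an assumption you should check on your robot by commanding a small turn.

```java
/** Hypothetical proportional in-place rotation controller driven by horizontal pixel error. */
final class RotationController {
  private final double gain;      // angular velocity per pixel of error
  private final double maxOmega;  // saturation limit, keeps the turn slow
  private final double tolPixels; // deadband: |error| <= tolPixels counts as aligned

  RotationController(double gain, double maxOmega, double tolPixels) {
    this.gain = gain;
    this.maxOmega = maxOmega;
    this.tolPixels = tolPixels;
  }

  /**
   * Commanded angular velocity. Assumed convention: image x grows to the right and positive
   * omega turns counterclockwise, so a blob right of the target (positive error) turns clockwise.
   */
  double omega(double blobX, double targetX) {
    if (isAligned(blobX, targetX)) return 0.0;
    double w = -gain * (blobX - targetX);
    return Math.max(-maxOmega, Math.min(maxOmega, w));
  }

  boolean isAligned(double blobX, double targetX) {
    return Math.abs(blobX - targetX) <= tolPixels;
  }
}
```

Pass the result as the angular-velocity command of whichever in-place rotation method you choose (for example driveSetVW()); check the OHMM API for that method's exact signature and units. The gain must be tuned; start small.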

You may choose from any of the available in-place rotation methods, such as driveSetVW(), driveOrientationServo(), driveTurn(), etc. You may find that it is easiest to develop a controller in the usual way if you simply command the rotate-in-place speed. Hint: don’t try to move too quickly. Keep the arm stowed so that it is mostly out of the way in the images.


Develop a similar controller that drives the robot forward or backward radially toward/away from the object based on the vertical difference in image pixels between the current blob location and the target location set by the calibration click. Have this controller automatically engage once the turn-in-place controller is within a tolerance of the goal orientation. The idea is to drive the robot to an offset spot where the arm can successfully reach out and grab the object, the same as in the grasping part of lab 3.
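The radial controller can follow the same pattern, using the vertical pixel error and only engaging once the heading is within tolerance. A minimal sketch with a hypothetical ApproachController; it assumes that, with the camera aimed down, a more distant object appears higher in the image (smaller y), so verify the sign before trusting it.

```java
/** Hypothetical proportional radial (forward/backward) controller driven by vertical pixel error. */
final class ApproachController {
  private final double gain;      // speed per pixel of error (units must match your drive command)
  private final double maxSpeed;  // saturation limit
  private final double tolPixels; // deadband: |error| <= tolPixels counts as at the goal

  ApproachController(double gain, double maxSpeed, double tolPixels) {
    this.gain = gain;
    this.maxSpeed = maxSpeed;
    this.tolPixels = tolPixels;
  }

  /**
   * Forward speed, engaged only once the heading is aligned. Assumes a blob above the
   * calibrated target (negative error) means the object is too far, i.e. drive forward.
   */
  double velocity(double blobY, double targetY, boolean headingAligned) {
    if (!headingAligned || atGoal(blobY, targetY)) return 0.0;
    double v = -gain * (blobY - targetY);
    return Math.max(-maxSpeed, Math.min(maxSpeed, v));
  }

  boolean atGoal(double blobY, double targetY) {
    return Math.abs(blobY - targetY) <= tolPixels;
  }
}
```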

Detect when the object is close enough to the goal location in the image to stop robot motion and engage the arm. Open the gripper, use your arm IK to lower and then extend, then close the gripper. Use the same motions as in the grasping part of lab 3.
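One way to organize the phases of this part of the lab (calibration, waiting for r, rotate, approach, grasp, return home) is a small per-frame state machine. A minimal sketch with hypothetical names; it requires the at-goal condition to hold for several consecutive frames before stopping, which helps reject single-frame detection noise, and it leaves the actual motions (stowing the arm, the lab 3 grasp, driving home) to the caller.

```java
/** Hypothetical sequencing of the lab's phases; the caller performs the actions on each transition. */
final class ServoStateMachine {
  enum State { CALIBRATE, WAIT_FOR_RUN, ROTATE, APPROACH, GRASP, RETURN_HOME, DONE }

  private final int framesRequired; // consecutive at-goal frames required before stopping
  private State state = State.CALIBRATE;
  private int framesAtGoal = 0;

  ServoStateMachine(int framesRequired) { this.framesRequired = framesRequired; }

  State state() { return state; }

  /** Advances the state machine once per processed frame and returns the new state. */
  State update(boolean calibrated, boolean runPressed, boolean headingAligned, boolean atGoal) {
    switch (state) {
      case CALIBRATE:
        if (calibrated) state = State.WAIT_FOR_RUN;
        break;
      case WAIT_FOR_RUN:
        if (runPressed) state = State.ROTATE; // caller stows the arm on this transition
        break;
      case ROTATE:
        if (headingAligned) state = State.APPROACH;
        break;
      case APPROACH:
        if (!headingAligned) { // heading drifted: go back to turning in place
          state = State.ROTATE;
          framesAtGoal = 0;
          break;
        }
        framesAtGoal = atGoal ? framesAtGoal + 1 : 0;
        if (framesAtGoal >= framesRequired) state = State.GRASP; // caller stops and runs the grasp
        break;
      default:
        break; // GRASP, RETURN_HOME and DONE advance via graspDone() and homeReached()
    }
    return state;
  }

  void graspDone() { if (state == State.GRASP) state = State.RETURN_HOME; }

  void homeReached() { if (state == State.RETURN_HOME) state = State.DONE; }
}
```

Falling back from APPROACH to ROTATE when the heading drifts is a design choice; using a wider tolerance to leave APPROACH than to enter it (hysteresis) can avoid chattering between the two.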

Return “home” with the object, i.e. return the robot to the global pose where it was when you hit r. For example, if your program initializes the odometry pose to (0, 0, 0) when you hit r, then driving back to (0, 0, 0) is equivalent to returning home. Also make sure to finish motion with the arm in its kinematic home pose.
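If you record the odometry pose when r is pressed, returning home can be done as turn, drive straight, turn. A minimal sketch of that geometry with a hypothetical ReturnHome class; it assumes a standard planar pose (x, y, θ) with θ in radians measured counterclockwise, and leaves the choice of drive commands to you.

```java
/** Hypothetical turn-drive-turn plan from the current pose back to a recorded home pose. */
final class ReturnHome {
  /** First rotation (rad), straight-line distance (same units as x, y), final rotation (rad). */
  static final class Plan {
    final double turn1, distance, turn2;
    Plan(double turn1, double distance, double turn2) {
      this.turn1 = turn1;
      this.distance = distance;
      this.turn2 = turn2;
    }
  }

  /** Wraps an angle to [-pi, pi]. */
  static double wrap(double a) {
    return Math.atan2(Math.sin(a), Math.cos(a));
  }

  static Plan plan(double x, double y, double theta, double homeX, double homeY, double homeTheta) {
    double dx = homeX - x, dy = homeY - y;
    double distance = Math.hypot(dx, dy);
    if (distance < 1e-9) return new Plan(0.0, 0.0, wrap(homeTheta - theta)); // already there: just turn
    double bearing = Math.atan2(dy, dx);      // direction of travel toward home
    double turn1 = wrap(bearing - theta);     // turn to face home
    double turn2 = wrap(homeTheta - bearing); // restore the recorded heading
    return new Plan(turn1, distance, turn2);
  }
}
```

Execute turn1 with an in-place rotation, drive the distance straight ahead, execute turn2, and then move the arm to its kinematic home pose. Because odometry drifts, the final pose will only be approximately the recorded one.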

- Optional extra credit:

  - Detect when the object is not visible in the camera. Either stop and display an error or engage a search behavior to re-acquire the object.

  - Verify that the grip succeeded. For example, return the arm to its home pose after attempting to pick up the object, and verify that the object moves in the image as it should if it is actually held in the gripper.
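For the first extra-credit item, one common approach is to declare the target lost only after it has been missing for several consecutive frames, so a single dropped detection does not trigger a stop or a search. A minimal sketch with a hypothetical LostTargetMonitor; what counts as “found” (for example, a blob above a minimum area) is up to your tracker.

```java
/** Hypothetical helper: declares the target lost after several consecutive frames without it. */
final class LostTargetMonitor {
  private final int maxMissedFrames;
  private int missed = 0;

  LostTargetMonitor(int maxMissedFrames) { this.maxMissedFrames = maxMissedFrames; }

  /** Call once per frame with whether the blob was found; returns true while the target is lost. */
  boolean update(boolean blobFound) {
    missed = blobFound ? 0 : missed + 1;
    return isLost();
  }

  boolean isLost() { return missed >= maxMissedFrames; }
}
```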

You will be asked to demonstrate your code for the course staff in lab on the due date for this assignment (listed at the top of this page); 30% of your grade for the lab will be based on the observed behavior. We mainly want to see that your code works and is as bug-free as possible.

The remaining 70% of your grade will be based on your code, which you will hand in following the general handin instructions by the due date and time listed at the top of this page. We will consider the code completeness, lack of bugs, architecture and organization, documentation, syntactic style, and efficiency, in that order of priority. You must also clearly document, both in your README and in code comments, the contributions of each group member.
