CS5350: Assignment 2

DUE: 11:59pm, Tuesday 2/28

Worth: 10% of your final grade

The course policies page outlines academic honesty as applied to this course, including policies for working with other students on assignments. It also gives the rules for on-time homework submission.

- get hands-on experience with OpenCV

- work with live video from a camera and/or video from a file

- calibrate a camera

- create your first OpenCV program that draws graphics over a live video image

There are three problems. Grading and turn-in details are given below.

Note: you will likely want to use the same installation of OpenCV, and the same camera, for the next assignment (HW3).

In this problem you will install and test OpenCV. OpenCV is a popular free open-source library for a variety of computer vision tasks. It has a focus on providing optimized implementations of real-time algorithms (i.e. algorithms intended to run on every frame of a live video stream coming in at 10 or more frames per second), but it also includes some off-line algorithms. It is under very active development under the direction of its founders, Gary Bradski and others, many of whom are now employed by Willow Garage.

OpenCV is developed primarily in C/C++, but bindings also exist that allow you to use it from a variety of other programming environments including Java, Python, and others (more below). OpenCV is evolving quickly; at the time of this writing the most recent stable release is version 2.3.1. In principle it is relatively portable across processor architectures and operating systems. In practice, it contains many optimized algorithms that will only be enabled on Intel processors. It works well on most current GNU/Linux, Macintosh OS X, and Microsoft Windows distributions.

For this assignment we will focus on supporting OpenCV on current Intel-based Windows, GNU/Linux, and OS X systems, with application programming in C, C++, or Java.

- Familiarize yourself with the OpenCV documentation, including the official wiki, the official API documentation (covers the C, C++, and Python interfaces), and the book Learning OpenCV. All the example code we provide below is based on the C API, even the C++ and Java examples; the latter uses the JavaCV library. (You may also be interested in the OpenCV bug tracker.)

- Decide on (i) one or more computers and (ii) one or more cameras you will use for the rest of this homework. A large number of common USB webcams work with OpenCV; however, the actual compatibility depends on the combination of camera and host platform you intend to use. If in doubt, or if you simply do not have any camera available, talk with the course staff. We will be able to lend out a limited number of cameras for this assignment. Also, if you work with the course staff and we are unable to get a camera to work for you, you may complete the assignment using the provided video files only.

- Install OpenCV on the computer(s) you identified in 1.b. See below for details.

- Test your OpenCV installation, and its compatibility with your camera, by running the C++ cvdemo program we provide below. If it doesn’t work, (i) contact the course staff and (ii) go back to step 1.b. It is essential to make sure early on that you have a stable way to read input image data into OpenCV. This is one of the most common challenges when starting to use OpenCV; both the camera and video file input drivers can have issues on different systems.

- Decide on the programming environment you want to use to complete problems 2 and 3 of the homework assignment. There are a variety of possible choices, but we will mainly focus on supporting C, C++, and Java. Install any necessary language binding libraries (more info below), and test everything either by running one of the language-specific demo programs we provide below or by developing your own, if necessary. You must be able to capture at least 10 frames per second (in cvdemo hit g with input focus on the video window to get debug output for that frame including FPS) at a minimum of 640x480 (or, if you worked with the course staff and were not able to get a camera running, you must be able to capture frames from the provided video files) and display them on screen. If you develop your own test program for this step, please include it with your hand-in.

In this problem you will calibrate your camera. Print out this chessboard calibration target on a standard 8.5 by 11 inch sheet of paper. This chessboard has 10 corners horizontally and 7 vertically (these counts do not include the outermost corners, nor should they). The pitch (side length of each square) is approximately 1 inch (*).

(*) The actual pitch will depend on whether the method you use to physically print it involves any scaling, as is common. In fact the precise value of the pitch does not matter too much. Many routines simply define the pitch as “1 physical unit”, and all the resulting data is in those units. For example, this assumption is made by the calibration program unless you use the “-s” flag. For our chessboard, this assumption is just fine and will give data in physical units of approximately one inch.

Acquire 10 clear images of the chessboard from a variety of viewpoints (e.g. using cvdemo or the equivalent in your programming environment). In each image the entire chessboard must be visible, and should take up at least about 1/3 of the frame. Be sure the chessboard remains planar, either by placing it flat on a table or by pasting it to a flat board.

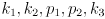

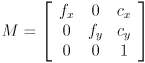

Use the calibration program (which comes as a sample with OpenCV, a copy is provided below) to process those images and generate a calibration file in YAML format (similar in concept to XML, but fewer < and >). This calibration file is readable both by human and machine. The important values are the coordinates $(c_x, c_y)$ of the principal point (the actual intersection of the optical axis of the lens system with the pixel array), the horizontal and vertical focal lengths $f_x$ and $f_y$, and the distortion parameters.

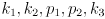

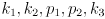

$f_x$, $f_y$, $c_x$, and $c_y$ are the camera intrinsics and are included in the YAML file as the camera_matrix. This is actually presented as the 9 data values from the $3 \times 3$ matrix

$$\begin{bmatrix} f_x & 0 & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix}$$

reading left to right across the top row, then across the middle row, and finally across the bottom row. (Note: many common cameras actually have square pixels, so it should not be surprising for $f_x$ and $f_y$ to be approximately equal. If one is within about 10% of the other, it’s safe to assume they are actually the same for your camera, and you may get better results by re-running calibration with the flag “-a 1”.)
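As a rough illustration of the row-major layout described above, the following C++ sketch unpacks nine camera_matrix values into the four intrinsics and checks whether the two focal lengths are within 10% of each other. The `Intrinsics` struct and both function names are hypothetical helpers made up for this example, not part of OpenCV or the provided code, and the 10% check is measured against the larger of the two focal lengths, which is only one reasonable reading of "within about 10%".

```cpp
#include <algorithm>
#include <array>
#include <cmath>

// Camera intrinsics in pixels (hypothetical helper type for this sketch).
struct Intrinsics {
    double fx, fy;  // horizontal and vertical focal lengths
    double cx, cy;  // principal point coordinates
};

// Unpack the 9 row-major values of the 3x3 camera matrix
//   [ fx  0  cx ]
//   [  0 fy  cy ]
//   [  0  0   1 ]
Intrinsics unpackCameraMatrix(const std::array<double, 9>& m) {
    // Indices: m[0] = fx, m[2] = cx, m[4] = fy, m[5] = cy.
    return Intrinsics{m[0], m[4], m[2], m[5]};
}

// True if fx and fy differ by no more than tol times the larger of the two.
bool focalLengthsAgree(const Intrinsics& k, double tol = 0.10) {
    double larger = std::max(k.fx, k.fy);
    return std::fabs(k.fx - k.fy) <= tol * larger;
}
```

If `focalLengthsAgree` returns true for your values, that is the situation in which re-running calibration with “-a 1” may be worth trying.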

The distortion parameters are the radial and tangential distortion model coefficients and are included as the distortion_coefficients matrix in the YAML file.

In the README for your hand-in, state (i) the make and model of the camera you’re using, (ii) its horizontal and vertical resolution in pixels, and (iii) the values you got for $c_x$, $c_y$, $f_x$, $f_y$, and the distortion parameters. Please give each value in scientific notation with four digits of precision after the decimal point.

Now you’ll do some experiments to check whether the calibration data you got is believable. The four (or three, if you set  ) camera intrinsics are all measured in pixels. Given the horizontal and vertical dimensions of your camera images, what value of the point

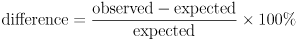

) camera intrinsics are all measured in pixels. Given the horizontal and vertical dimensions of your camera images, what value of the point  would you expect for an ideally constructed camera? By what percent do your measured values for these two quantities differ from the expected values? Use the formula

would you expect for an ideally constructed camera? By what percent do your measured values for these two quantities differ from the expected values? Use the formula  . Put the answer in your README.

. Put the answer in your README.
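The principal point check can be sketched in a few lines of C++. This assumes the usual definition of percent error (absolute difference divided by the expected value, times 100) and takes the ideal principal point to be the exact image center, i.e. half the width and half the height. Both function names are hypothetical and exist only for this example.

```cpp
#include <cmath>

// Percent error of an observed value relative to an expected value
// (assumed definition: 100 * |observed - expected| / |expected|).
double percentError(double observed, double expected) {
    return 100.0 * std::fabs(observed - expected) / std::fabs(expected);
}

// Principal point coordinate along one axis for an idealized camera,
// assumed here to be the center of the image along that axis.
double idealPrincipalCoord(int sizePixels) {
    return sizePixels / 2.0;
}
```

For example, with a 640 pixel wide image and a measured horizontal principal point coordinate of 330, `percentError(330.0, idealPrincipalCoord(640))` evaluates to 3.125.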

To check the focal length(s) you will calculate the horizontal and vertical fields of view (FoV) of your camera. Given that you have the distance from the pixel grid to the center of projection measured in pixels ($f_x$ resp. $f_y$), calculate the horizontal and vertical FoV of your camera in degrees, as a function of $f_x$, $f_y$, $w$, and $h$, where the latter two are the horizontal and vertical resolution of the camera in pixels (for these calculations assume that $c_x$ and $c_y$ have the values you would expect if the camera was ideally constructed). You will need to use some basic trigonometry to calculate the FoVs from the pixel measurements. Check that the horizontal FoV you get is reasonable for a common camera; vertical FoV is typically somewhat smaller because there are typically fewer vertical pixels than horizontal, and pixels are typically square.
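One way to set up the trigonometry, shown here as a hedged C++ sketch: if the principal point sits at the image center, half of the image extent and the focal length (both in pixels) form the two legs of a right triangle whose angle at the center of projection is half the FoV. The function name `fovDegrees` is hypothetical and not part of the provided code.

```cpp
#include <cmath>

// Field of view in degrees along one image axis, assuming the principal
// point is at the image center. focalPixels is the focal length along that
// axis and sizePixels is the image resolution along that axis, both in pixels.
double fovDegrees(double focalPixels, double sizePixels) {
    const double kPi = std::acos(-1.0);
    // Half-angle = atan((size / 2) / focal); the full FoV is twice that.
    double halfAngle = std::atan2(sizePixels / 2.0, focalPixels);
    return 2.0 * halfAngle * 180.0 / kPi;
}
```

Call it once with the horizontal focal length and image width, and once with the vertical focal length and image height. As a sanity check on the formula, a focal length equal to half the image size gives exactly 90 degrees.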

Now you will make an independent physical measurement of the FoVs. You will need (a) either a measuring tape at least about 3m or 10ft long or a piece of string and a ruler, and (b) some way to place marks on a wall, such as with removable tape (or use a blackboard or whiteboard). Talk to the course staff if you cannot get the needed materials. Place two marks on the wall separated by a measured horizontal distance $d$ of about 2m (or 6ft). Using cvdemo, hold your camera so that it can see both marks and then back away from the wall until the marks are at the absolute left and right sides of the image. Measure the perpendicular distance $l_h$ from the camera to the wall. Then turn the camera $90^\circ$ and repeat the experiment, using the same $d$, but now record the distance $l_v$ to the wall. Use $d$, $l_h$, and $l_v$ to calculate the actual horizontal and vertical FoV of the camera, again invoking the necessary trigonometry. Call these the “expected” values, and calculate the percent error relative to the values “observed” from the camera calibration above.

Put all your FoV calculations and the numeric results in your README.
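The wall measurement uses the same kind of right triangle, only in physical units instead of pixels. The C++ sketch below assumes the camera is centered between the two marks and aimed perpendicular to the wall, so half the mark separation and the camera-to-wall distance are the two legs. The function and parameter names are hypothetical; use whatever units you measured in, as long as both arguments share them.

```cpp
#include <cmath>

// Physically measured field of view in degrees, given the separation between
// the two wall marks and the perpendicular camera-to-wall distance at which
// the marks just reach opposite edges of the image (same units for both).
double measuredFovDegrees(double markSeparation, double wallDistance) {
    const double kPi = std::acos(-1.0);
    double halfAngle = std::atan2(markSeparation / 2.0, wallDistance);
    return 2.0 * halfAngle * 180.0 / kPi;
}
```

For instance, marks 2m apart that fill the frame from 1m away would correspond to a 90 degree FoV. Use the distance recorded with the camera upright for the horizontal FoV and the distance recorded with the camera turned for the vertical FoV.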

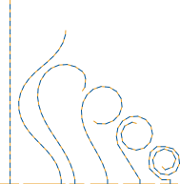

The distortion parameters are a bit harder to understand intuitively. First, for most common webcams, they should be fairly low in magnitude, i.e., the absolute value of each will typically be less than one, and often less than 0.1. Second, you can qualitatively check that applying them to undistort the actual camera images results in straight lines looking straighter. If your camera did not have much distortion to start with then the effect may not be that impressive, but it is still useful to verify that the result is no worse than what you started with. Use the cvundistort program provided below to test undistortion. Aim the camera at a known-straight object in the scene. You may toggle undistortion on/off by hitting u. With undistortion turned on, pause the input by hitting the spacebar. Then save both the raw capture image and the undistorted image by hitting c and p, respectively. Include these images with your hand-in.

In this problem you will create your first OpenCV program.

Using either the provided cvdemo example code or your own code, get a baseline program running that connects to a camera and displays the live video on-screen. Aim for a resolution of 640x480 and a speed of at least 10 frames per second. (In cvdemo hit g with keyboard focus on the video window to get debug output for that frame including FPS.)

Modify the program to draw over the live video. For this assignment, the drawings do not have to relate at all to the content of the video. It just has to look like you are drawing on a “glass sheet” that happens to have a video playing underneath it. In particular, you must at least use the OpenCV API functions cvLine() and cvEllipse(), and you must draw at least one object each in red, green, and blue.

The official instructions are generally to compile OpenCV from source, with the exception that official precompiled binaries are provided for Windows.

For this assignment, please try to install the latest release version of OpenCV. Official download files are provided for Windows and for Unix (including Mac OS X).

Again, with the exception of Windows users, the official downloads are source-only and you will need to compile them. It is often much faster, and sometimes less error prone, to install pre-compiled binaries than to rebuild a project from its source code. Your Mileage May Vary, but we have found the following binary downloads to be useful.

Windows users can use the official Windows OpenCV download. We downloaded OpenCV-*-win-superpack.exe and extracted it to c:\. This produced the directory c:\opencv containing the OpenCV source and the subdirectory c:\opencv\build containing binary builds for several different compilers.

If you plan to code in C/C++ on Windows you will need to install MSVC or the MinGW port of GCC. In general MSVC is not free, though CCIS students at NEU can get a license. Microsoft does offer a “free evaluation” version of Visual Studio 2010 (aka Visual Studio 10) called Visual C++ 2010 Express.

To use MinGW we downloaded the mingw-get-inst*.exe installer and selected both the C and C++ compilers and also the MSYS environment, which provides a basic bash shell and a few key tools like make. Then we added

c:\opencv\build\x86\mingw\bin

to the PATH environment variable (in XP or Windows 7 you can do this at “control panel->system->advanced->environment variables->PATH->edit”). This is done for your convenience in the provided setup-mingw.sh, which should be sourced like this in the MinGW shell:

. setup-mingw.sh

Then you can use the provided makefiles as usual.

Unfortunately there seems to be an open bug with the MinGW OpenCV precompiled libraries that causes programs to segfault in the image display code. One option is to recompile the libraries with SSE disabled. We did this; replace the .dlls in c:\opencv\build\x86\mingw\bin with the contents of this zip. Also, it may be possible to use either version of the MinGW precompiled libraries as long as an alternate codepath is used for display, which includes using the MinGW OpenCV precompiled libraries with JavaCV code.

To use MSVC we installed MSVC++ 2010 Express. Then we added

c:\opencv\build\x86\vc10\bin

c:\opencv\build\common\tbb\ia32\vc10

to the PATH environment variable (see similar details above for MinGW). This is done for your convenience in the provided setup-msvc.sh, which should be sourced like this in the MinGW shell:

. setup-msvc.sh

Yes, you can use the MinGW shell together with the MSVC compiler. Also see below for details on how to use the provided makefiles with MSVC.

If you plan to code in Java on Windows, then you do not need the C/C++ compiler, but you will still need its run-time .dlls. You will also need these .dlls just to run the pre-compiled MSVC and MinGW executables for the demo code below.

For MinGW the necessary runtime .dlls are installed when you install MinGW. You may need to invoke the programs from within the MinGW MSYS shell. Make sure the MinGW OpenCV .dlls are on your path as described above.

For MSVC, install the appropriate MSVC 10 runtime for x86, x64, or ia64 (the last is unlikely unless you are running on some kind of server-class machine). Finally, make sure the MSVC 10 OpenCV .dlls are on your path as described above.

There are many Linux distributions, each of which potentially requires different binary packages. We mainly use Ubuntu, for which the currently recommended versions are 10.04 (lucid, current long term support release) and 11.10 (oneiric, latest release). If you are considering making a fresh install of Ubuntu, we currently recommend 11.10 (unless you will use it in a virtual machine under VMware Fusion on an OS X host, where we currently recommend 10.04 because of several bugs with later versions).

The official Ubuntu repositories for all current Ubuntu versions do contain OpenCV packages, though the package names and the available versions depend on the version of Ubuntu you are running. Instead, we recommend using the OpenCV packages provided by the ROS (Robot Operating System) project. Note that you should not need to install all of ROS, which is typically several GB, but only the OpenCV components. This should work on all current Ubuntu versions:

ROS_REPO="deb http://packages.ros.org/ros/ubuntu `lsb_release -cs` main"

sudo sh -c "echo \"$ROS_REPO\" > /etc/apt/sources.list.d/ros-latest.list"

wget http://packages.ros.org/ros.key -O - | sudo apt-key add -

sudo apt-get update

sudo apt-get install libopencv2.3 libopencv2.3-dev libopencv2.3-bin

We are not aware of any precompiled binaries of the newest OpenCV version for OS X. Please see the instructions to compile from source below.

If you were unable to get OpenCV working with the binary downloads above, your next option is to build the OpenCV libraries from source. You will need cmake whether you plan to use MinGW or MSVC.

1. unpack OpenCV-*-win-superpack.exe to the directory c:\opencv as above

2. create a subdirectory opencv\build\local

3. run cmake-gui (e.g. from the Start menu)

4. Browse Source... to c:\opencv

To use MinGW

5. Browse Build... to c:\opencv\build\local\mingw (create if needed)

6. Configure twice

7. select MinGW Makefiles, accept other defaults

8. wait until you get a window full of red highlight

9. accept default configuration but disable all SSE options

10. Configure again, the red highlight should disappear

11. Generate

12. close cmake-gui

13. navigate to c:\opencv\build\local\mingw in a MinGW terminal

14. run mingw32-make

To use MSVC

5. Browse Build... to c:\opencv\build\local\msvc (create if needed)

6. Configure

7. select Visual Studio 10, accept other defaults

8. wait until you get a window full of red highlight

9. accept all default build options but enable BUILD_EXAMPLES

10. Configure

11. Generate

12. close cmake-gui

13. open project c:\opencv\build\local\msvc\ALL_BUILD.vcxproj in MSVC

14. wait for MSVC to parse every file related to the project

15. right click on ALL_BUILD, select "Build"

For Linux, the official specific instructions to build OpenCV from source are here, but for Debian and Ubuntu there are also more specific instructions.

For OS X, the official specific instructions to build OpenCV from source are here. You will need Apple’s free development environment xcode. One option that worked for us is to use the macports system:

1. install xcode

2. install macports

3. sudo port selfupdate

4. sudo port install opencv

5. sudo port install pkgconfig

OpenCV can be used from a variety of programming languages in addition to C/C++. It ships with bindings for Python, and several different bindings to Java are available as separate projects; the most complete seems to be JavaCV.

We are focusing on support for C, C++, and Java. If you will be using C or C++, there is nothing you need to do other than to install the OpenCV libraries and header files (which you did above) and to get your compiler and linker working with them.

If you are using Java,

Read the documentation for JavaCV.

Download the latest JavaCV binaries and unzip them.

Compile your code with javacv.jar and javacpp.jar in the CLASSPATH. The makefile provided with the Java examples can help with this; on Unix, OS X, or Windows in a MinGW shell, just do

make CvDemo.class

`make -s java-cmd` CvDemo ARGS

where ARGS are the command line arguments (if any) that you want to pass to CvDemo. The directory containing the JavaCV jars defaults to the current directory, but this can be overridden by setting the environment variable JCV_DIR. For example, on Windows we made the directory

c:\opencv\javacv

and we unpacked the latest JavaCV javacv-bin-*.zip there. Then (in a MinGW shell) we set the environment variable

export JCV_DIR='c:\opencv\javacv\javacv-bin'

and appended c:\opencv\build\x86\mingw\bin to the PATH environment variable.

We have prepared and tested a variety of sample programs. Some we have written from scratch, others are provided with OpenCV. These should be useful to you in three ways:

You can study how we wrote them to help you understand how to structure your own code.

You can use code from them directly in your own projects. In particular, if you are coding in C++ or Java then the corresponding implementation of CvBase should be useful as a base class for the program you need to develop for problem 3 (and also for the next assignment, HW3). The C module cvbase should be similarly useful if you are coding in C.

For problems 1 and 2 you will need to capture images, calibrate your camera, and perform undistortion. The cvdemo and calibration programs (or some equivalent) will be needed for those tasks.

First, pre-built binaries of the example code are provided for some platforms. Note that these are generally dynamically linked against the OpenCV libraries (.dlls on Windows), so you must have correctly installed OpenCV for them to work. Links to the corresponding source code are given below.

However, it’s instructive to know how to recompile the examples from the provided source code. You could also use the same approach to compile your own code, of course. We provide makefiles (C++, C, Java). Just type e.g.

make cvdemo

and it should do the right thing on Linux and OS X (provided you followed the instructions above). On Windows, install MinGW even if you are going to use MSVC to build your code; we need it just to get a standard make. To use the MinGW GCC compiler, run

make cvdemo

in the MinGW shell. To use MSVC first add the line

call "c:\Program Files\Microsoft Visual Studio 10.0\VC\vcvarsall.bat"

at the top of the file c:\MinGW\msys\1.0\msys.bat, then (re-)start the MinGW shell, and use the command line

export CXX=cl CC=cl

make cvdemo

These options both assume you have configured your PATH and JCV_DIR environment variables appropriately for the compiler you want to use as described above. If you installed both OpenCV and JavaCV into the recommended directories, you can quickly do this by using one of the provided scripts setup-mingw.sh or setup-msvc.sh.

source setup-mingw.sh

or

source setup-msvc.sh

The latter script also sets CXX=CC=cl as a convenience.

Except for calibration.cpp, these are all derived from a common base class called CvBase which is provided in cvbase.hpp and cvbase.cpp.

cvdemo.cpp is a very simple executable demonstration program that shows the basic functionality of CvBase. You can use it to view frames from a camera or video file and to save snapshots to .png image files. Run it with the command line argument “-h” to get help on the other possible command line arguments. Hit h with input focus over the video window to get help on the available key commands in the GUI.

cvrecord.cpp is another demonstration program built on CvBase. It has most of the same functionality as cvdemo, but also saves all the captured frames to an output video file.

cvundistort.cpp is yet another demonstration program built on CvBase. It has much the same functionality as cvdemo, but also allows the captured frames to be undistorted if a camera calibration file is available in the YAML format output from calibration. Note that at least two command line parameters are always required to run cvundistort: the first gives the OpenCV index of the camera to use (-1 to use the first available camera), and the second gives the name of (or path to) the calibration file.

calibration.cpp is the camera calibration program that comes with OpenCV, to which we’ve added a few extra features, including control of the camera capture resolution. Run it with no command line arguments to get help.

cvbase is a C module that is a translation of the above CvBase C++ base class. It is provided in cvbase.h and cvbase.c. The C version of cvdemo gives an example of its use.

cvdemo.c is a version of the C++ cvdemo written in C, using the cvbase module.

CvBase.java is a version of the C++ CvBase written in Java.

CvDemo.java is a version of the C++ cvdemo written in Java.

If you cannot get a camera to reliably capture images into OpenCV, even after working with the course staff, you may use this example data in substitution for camera input. Several still images and a video sequence are provided. All data was acquired in  resolution with a Logitech Webcam Pro 9000.


- On Mac OS X video writing (as we do in the demo program cvrecord) seems very buggy, as does setting capture resolution with some cameras.

- Sometimes requesting a 640x480 image from a camera with a higher native resolution will incorrectly show a cropped instead of scaled image. This may only affect Mac OS X. If the image looks cropped (typically you can only see the upper left 640x480 corner of the larger image) then restart the program.

We will consider all three problems to be “code”, so you may work with a partner. In fact, we encourage it. Follow the instructions on the assignments page to submit your work.

Out of 100 total possible points, 30 will be allocated to problem 1, 40 to problem 2, and 30 to problem 3.
