CS4610: LAB 4

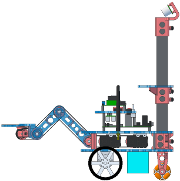

In the first part of this lab you’ll assemble the mast and camera on your robot. We are using Logitech Webcam Pro 9000 cameras, which conform to the UVC standard for USB video.

In the second part of the lab you’ll write high level code to capture images and process them to find an object with a known color, using OpenCV via the JavaCV bindings.

In the third part you will use image-based visual servoing to drive the robot to the detected object location and grasp it.

- Mast

- Get a mast, camera (find the one with your group number), camera bracket, and four 2-56 x 1/4" screws. Also get one color ball “object”.

- The camera bracket should already have two black thumbscrews. Make sure the tips of these are not protruding (back them off if needed). Then attach the camera to the bracket with the 2-56 screws as demonstrated. Be gentle; the camera mount is fragile.

- Feed the camera USB cable down through the mast until you see it under the round hole in the side of the mast. Use your needle nose pliers to grab it and work it through the hole (see demonstration, it’s tricky).

- Insert the mast into the mast bracket above the rear wheel. Make sure the round hole ends up on the bottom at robot right.

- Fit the camera bracket over the top of the mast.

- Attach the camera to a USB port on the right side of the Panda.

- Stow the extra USB cable inside the mast.

- Aim the camera down and gently tighten the thumbscrews as directed.

- Fan

- Get a 12V fan assembly.

- Snap the fan assembly onto the left rear round mezzanine standoff above the Panda (see demonstration). Gently push any wires out of the way. Make sure the fan ends up facing down and is aimed directly over the ARM chip.

- Connect the fan to the 12V power takeoff on the left side of the Org.

We have prepared an archive file of source code that you’ll use in this lab. Follow similar instructions as for lab 0 to download and unpack it (on the panda). Make sure that the unpacked lab4 directory is a sibling to your existing lab? directories that were unpacked from the prior lab tarballs.

If you are running the panda headless, use the following command to download a copy of the lab tarball:

> wget http://www.ccs.neu.edu/course/cs4610/LAB4/lab4.tar.gz

We ask you not to modify the files you unpack from the lab tarball. You will write code that refers to these files and that links to their generated libraries. Please do not work directly in the provided files, in part because we will likely be posting updates to the lab tarball.

- In lab4/src/org/libpololu-avr run make to build the Pololu AVR library.

- In lab4/src/org/monitor run

> make

> make program

to build the OHMM monitor and flash the new monitor code to the Org.

- In lab4/src/host/ohmm run

> make

> make jar

to build OHMM.jar. (In the event that we distribute an updated lab tarball, you will need to re-make in org/libpololu-avr, org/monitor, and host/ohmm, re-program in org/monitor, and re-jar in host/ohmm.)

- Running

> make project-javadoc

in lab4/src/host/ohmm will generate documentation for the OHMM Java library in lab4/src/host/ohmm/javadoc-OHMM (the top level file is index.html); or you can view it online here.

- Install OpenCV dependencies:

> sudo apt-get install libgtk2.0-dev libv4l-dev libjpeg62-dev \
> libtiff4-dev libjasper-dev libopenexr-dev cmake python-dev \
> python-numpy libeigen2-dev yasm libfaac-dev libopencore-amrnb-dev \
> libopencore-amrwb-dev libtheora-dev libvorbis-dev libxvidcore-dev

Make sure all your users are in the video group. Repeat the following for each user:

> groups

Check that "video" is in the list. If it is not, run

> sudo usermod -a -G video `whoami`

then log out and back in, and run

> groups

again to verify that "video" is now in the list.

In lab4/src/host/cvutils run make to build the C++ OpenCV utilities.

You may wish to review the instructions in lab 2 on how to run OHMMShell and how to compile your own Java code against OHMM.jar.

Follow similar instructions as for lab 2 to make a checkout of your group svn repository on your panda as a sibling directory to the lab4 directory you unpacked from the lab tarball.

Change directory so that you are in the base directory of your svn repository checkout (i.e. you are in the g? root directory of the svn checkout), then run the commands

> mkdir lab4

> d=../lab4/src/host/ohmm

> cp $d/makefile $d/run-class ./lab4/

> cp $d/makefile-lab ./lab4/makefile.project

> sed -e "s/package ohmm/package lab4/" < $d/OHMMShellDemo.java > ./lab4/OHMMShellDemo.java

> sed -e "s/package ohmm/package lab4/" < $d/CvDemo.java > ./lab4/CvDemo.java

> svn add lab4

> svn commit -m "added lab4 skeleton"

This sets up the directory g?/lab4 in the SVN repository in which you will write all code for this lab. The makefile should not need to be modified: it is pre-configured to find the OHMM Interface library in ../../lab4/src/host/ohmm. It will also automatically compile all .java files you create in the same directory.

Change to your g?/lab4 directory and run

> make

> ./run-class CvDemo 0 160 120

This should display a small window with a 160 (width) by 120 (height) live feed from the camera. You will need to do this over an SSH connection to the panda with X forwarding (ssh -X -C). Alternatively, you could set up the panda to run with a monitor, but there are not enough USB ports on the panda to simultaneously connect a keyboard, mouse, the Org, and the camera. One option is to use another remote machine for the keyboard and mouse by setting up x2x.

Write a program that runs on the panda and processes incoming frames from the camera. We recommend you start at a fairly low resolution, say 320x240, and aim for about 10 frames per second. We have provided a Java base class ohmm.CvBase that we strongly recommend you consider using.
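If you write your own processing loop (rather than relying on whatever timing `ohmm.CvBase` provides, which we don't assume anything about here), one simple way to hold roughly 10 frames per second is to sleep only for the part of each frame period that processing did not use. The `FramePacer` class below is a hypothetical helper, a minimal sketch rather than part of the OHMM library.

```java
/** Hypothetical helper: paces a loop so it runs at (at most) a target frame rate. */
class FramePacer {
    private final long periodNanos; // length of one frame period

    FramePacer(double framesPerSecond) {
        if (framesPerSecond <= 0) throw new IllegalArgumentException("fps must be positive");
        this.periodNanos = Math.round(1e9 / framesPerSecond);
    }

    /**
     * Given when this frame's work started and ended (both from System.nanoTime()),
     * return the milliseconds left in the period, or 0 if processing overran it.
     */
    long remainingMillis(long startNanos, long endNanos) {
        long left = periodNanos - (endNanos - startNanos);
        return left > 0 ? left / 1_000_000L : 0L;
    }

    /** Sleep out the rest of the current period; returns early if interrupted. */
    void pace(long startNanos) {
        long ms = remainingMillis(startNanos, System.nanoTime());
        try {
            Thread.sleep(ms);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // keep the interrupt visible to the caller
        }
    }
}
```

In a loop you would record `long t0 = System.nanoTime();` before grabbing and processing a frame, then call `pacer.pace(t0);` at the end. If processing alone takes longer than 100 ms, no pacing helps; lower the resolution instead.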

You must have a way to display the incoming frames on a remote machine for debugging purposes. This debug view does not have to have the same resolution and frame rate as your actual processing. As the resolution and frame rate increase, so does the bandwidth required to transmit the images. We have found that even 320x240 is fairly slow using uncompressed remote X display. You may wish to use a smaller debug image (e.g. 160x120) and/or reduce the frame rate.
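To put rough numbers on this, assume uncompressed 24-bit RGB (3 bytes per pixel) and ignore protocol overhead. At 320x240 and 10 frames per second that is 320 × 240 × 3 × 10 = 2,304,000 bytes/s, about 18.4 Mbit/s, while 160x120 at the same rate needs a quarter of that, about 4.6 Mbit/s. The small helper below (a hypothetical name, just for the arithmetic) makes it easy to try other settings.

```java
/** Rough raw-video bandwidth estimate; assumes 3 bytes/pixel, no compression, no overhead. */
class RawBandwidth {
    static final int BYTES_PER_PIXEL = 3; // assumption: 24-bit RGB frames

    /** Bytes per second needed to send width x height frames at fps frames/s. */
    static long bytesPerSecond(int width, int height, int fps) {
        return (long) width * height * BYTES_PER_PIXEL * fps;
    }

    /** The same figure in megabits per second (1 Mbit = 1,000,000 bits). */
    static double megabitsPerSecond(int width, int height, int fps) {
        return bytesPerSecond(width, height, fps) * 8 / 1e6;
    }
}
```

Halving both dimensions cuts the load by a factor of four, and halving the frame rate cuts it by another factor of two.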

Because even sending relatively small images uncompressed (or even with the default lossless compression you get with ssh -C) takes significant network bandwidth, we have provided an alternative: CvBase can be configured to run an internal HTTP server that makes the images available with JPEG compression. Instead of bringing up a window, to see the debug images you connect to the server from a remote client browser (our implementation also sends keyboard and mouse events back to the server). This uses much less network bandwidth. Please make sure you can use this server and plan to use it for your demo on the due date for the lab. This will avoid a network meltdown when all groups try to run their code simultaneously.

Adapt your program so that it can respond to mouse clicks and keypresses in the debug window. Whenever the mouse is clicked have it print out on the panda the image coordinates of the mouse pointer at the time of the click. Similarly, whenever the ‘r’ key is pressed in the debug window, print out a message on the panda. (By “on the panda” we mean e.g. print to System.out in the control program running on the panda, so you can see the output in the terminal from which you ran the control program.)
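The handler logic itself is small. The sketch below uses hypothetical method names `onMouseClick` and `onKey`; connect them to whatever mouse and key callbacks `ohmm.CvBase` actually exposes (check its javadoc). It assumes the callbacks may arrive on a different thread from your control loop, hence the `volatile` fields, and it also records an 'r' press as a flag that the control loop can poll later.

```java
import java.io.PrintStream;

/** Hypothetical debug-input handler; method names are illustrative, not CvBase's API. */
class DebugInput {
    private final PrintStream out;                      // e.g. System.out on the panda
    private volatile int lastClickX = -1, lastClickY = -1;
    private volatile boolean runRequested = false;      // set by 'r', polled by the control loop

    DebugInput(PrintStream out) { this.out = out; }

    /** Print the image coordinates of a click and remember them. */
    void onMouseClick(int x, int y) {
        lastClickX = x;
        lastClickY = y;
        out.println("click at image (" + x + ", " + y + ")");
    }

    /** Print a message for 'r' and raise the run flag; other keys are ignored here. */
    void onKey(char key) {
        if (key == 'r') {
            runRequested = true;
            out.println("'r' pressed: run requested");
        }
    }

    int lastClickX() { return lastClickX; }
    int lastClickY() { return lastClickY; }
    boolean runRequested() { return runRequested; }
}
```

Constructing it as `new DebugInput(System.out)` sends the messages to the terminal from which you ran the control program.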

Develop a blob tracker that begins when the user clicks on a blob. Use that click to set the target color (you may want to average the pixel colors near the mouse click). Track the blob as it moves around in the image, either by moving the object or the camera. You must show a small “+” drawn over the live debugging image that indicates the center of the tracked blob. You may assume that if more than one blob matches the target color, you are to track the largest one.
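The lab intends you to use OpenCV through JavaCV for this; the sketch below deliberately avoids JavaCV calls so that it is self-contained, but it walks through the same steps: average the color around the click, threshold each pixel by color distance, find the largest 4-connected region, take its centroid, and draw a “+”. It assumes the frame is available as packed 0xRRGGBB ints in row-major order, such as those returned by `java.awt.image.BufferedImage.getRGB`; the class and method names are hypothetical.

```java
import java.util.ArrayDeque;

/** Sketch of a color-blob tracker on packed 0xRRGGBB pixels (row-major, width*height). */
class BlobTracker {
    static int r(int p) { return (p >> 16) & 0xFF; }
    static int g(int p) { return (p >> 8) & 0xFF; }
    static int b(int p) { return p & 0xFF; }

    /** Average color in a (2*radius+1)^2 window around (cx, cy), clipped to the image. */
    static int averageColor(int[] px, int w, int h, int cx, int cy, int radius) {
        long sr = 0, sg = 0, sb = 0;
        int n = 0;
        for (int y = Math.max(0, cy - radius); y <= Math.min(h - 1, cy + radius); y++) {
            for (int x = Math.max(0, cx - radius); x <= Math.min(w - 1, cx + radius); x++) {
                int p = px[y * w + x];
                sr += r(p); sg += g(p); sb += b(p); n++;
            }
        }
        if (n == 0) throw new IllegalArgumentException("click outside image");
        return (int) (sr / n) << 16 | (int) (sg / n) << 8 | (int) (sb / n);
    }

    /** True if p lies within tol of target in Euclidean RGB distance. */
    static boolean matches(int p, int target, int tol) {
        int dr = r(p) - r(target), dg = g(p) - g(target), db = b(p) - b(target);
        return dr * dr + dg * dg + db * db <= tol * tol;
    }

    /** Largest 4-connected matching region as {centroidX, centroidY, area}, or null if none. */
    static int[] largestBlob(int[] px, int w, int h, int target, int tol) {
        boolean[] seen = new boolean[w * h];
        ArrayDeque<Integer> stack = new ArrayDeque<>();
        int[] best = null;
        for (int start = 0; start < w * h; start++) {
            if (seen[start] || !matches(px[start], target, tol)) continue;
            long sumX = 0, sumY = 0;
            int area = 0;
            seen[start] = true;
            stack.push(start);
            while (!stack.isEmpty()) { // iterative flood fill, so no stack overflow on big blobs
                int i = stack.pop(), x = i % w, y = i / w;
                sumX += x; sumY += y; area++;
                int[] nbrs = {x > 0 ? i - 1 : -1, x < w - 1 ? i + 1 : -1,
                              y > 0 ? i - w : -1, y < h - 1 ? i + w : -1};
                for (int j : nbrs) {
                    if (j >= 0 && !seen[j] && matches(px[j], target, tol)) {
                        seen[j] = true;
                        stack.push(j);
                    }
                }
            }
            if (best == null || area > best[2]) {
                best = new int[] {(int) (sumX / area), (int) (sumY / area), area};
            }
        }
        return best;
    }

    /** Draw a small '+' of the given color centered at (cx, cy), clipped to the image. */
    static void drawPlus(int[] px, int w, int h, int cx, int cy, int arm, int color) {
        for (int d = -arm; d <= arm; d++) {
            if (cx + d >= 0 && cx + d < w && cy >= 0 && cy < h) px[cy * w + cx + d] = color;
            if (cy + d >= 0 && cy + d < h && cx >= 0 && cx < w) px[(cy + d) * w + cx] = color;
        }
    }
}
```

After drawing, the modified array can be written back with `BufferedImage.setRGB` before it is displayed. Thresholding by RGB distance is the simplest choice but is sensitive to lighting changes; thresholding on hue (for example after converting with `java.awt.Color.RGBtoHSB`, or with OpenCV's own color conversion) is a common, often more robust alternative.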

Use your object detection program to develop a closed-loop controller that rotates the robot in place to make it face the clicked object. This presupposes that the object was visible somewhere in the image to start with; for this lab, you will just place the provided object somewhere in the camera’s field of view. We would ideally like to see your code work no matter where the object is in relation to the robot, as long as it is initially visible.

Begin with a calibration step where you place the object in the robot’s gripper as it should appear after a successful grip (i.e. with the arm set so the gripper is near the ground and extended forward). Click on the image of the object in this calibration pose, both to set the target color and to set the target image location for the blob.

Then develop a controller (e.g. bang-bang or proportional) that uses the horizontal difference (in image pixels) between the current blob location and the target location to drive in-place rotation. After the calibration click, do not engage the visual servo controller (i.e. do not start moving the robot) until the user hits the ‘r’ key (run). This will allow you to take the object out of the robot’s gripper and move it to another place (but still in the camera field of view). When the user hits ‘r’ to begin motion, you should first stow the arm (e.g. in home pose) to keep it out of the way until you are ready to grip the object again.

You may choose from any of the available in-place rotation methods, such as OHMM.driveSetVW(), OHMM.driveOrientationServo(), etc. You may find that it is easiest to develop a controller in the usual way if you simply command the rotate-in-place speed and implement the rest of the controller in Java. Hint: don’t try to move too quickly. Keep the arm stowed so that it is mostly out of the way in the images.
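Here is a minimal sketch of a proportional turn-in-place law, under stated assumptions: image x grows to the right, a positive angular speed turns the robot counterclockwise (to the left), and the returned value is what you would pass as the angular-speed argument of an in-place rotation command such as OHMM.driveSetVW() with zero forward speed. Check the OHMM javadoc for that method's actual parameters, units, and sign convention; `RotationServo` and the gain values are hypothetical.

```java
/** Hypothetical proportional visual servo for in-place rotation (sketch, not OHMM API). */
class RotationServo {
    private final double kp;       // angular speed per pixel of horizontal error
    private final double maxSpeed; // magnitude limit, so the robot never turns too fast
    private final int deadbandPx;  // errors at or below this count as "aligned"

    RotationServo(double kp, double maxSpeed, int deadbandPx) {
        this.kp = kp;
        this.maxSpeed = maxSpeed;
        this.deadbandPx = deadbandPx;
    }

    /** Horizontal error in pixels: positive when the blob is right of the target. */
    static int error(int blobX, int targetX) { return blobX - targetX; }

    boolean aligned(int blobX, int targetX) {
        return Math.abs(error(blobX, targetX)) <= deadbandPx;
    }

    /** Angular speed command; a blob right of the target yields a clockwise (negative) turn. */
    double command(int blobX, int targetX) {
        if (aligned(blobX, targetX)) return 0.0;
        double w = -kp * error(blobX, targetX);
        return Math.max(-maxSpeed, Math.min(maxSpeed, w)); // saturate
    }
}
```

A bang-bang variant would return a fixed ±speed outside the deadband; the proportional version slows down as the error shrinks, which tends to reduce overshoot when the frame rate is low. If the robot turns away from the object, flip the sign of `kp`.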


Develop a similar controller that drives the robot forward or backward radially toward/away from the object. Have this controller automatically engage once the turn-in-place controller is within a tolerance of the goal orientation. The idea is to drive the robot to a spot where the arm can successfully reach out and grab the object.
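The radial stage can follow the same pattern using the vertical image error. This sketch assumes the camera looks down and forward, so a more distant object appears higher in the image (smaller y), and that a positive command drives the robot forward. It engages only once the horizontal error is within a rotation tolerance, i.e. after the turn-in-place stage has done its job. `RadialServo` and its parameters are hypothetical.

```java
/** Hypothetical forward/backward visual servo, gated on horizontal alignment (sketch). */
class RadialServo {
    private final double kv;       // forward speed per pixel of vertical image error
    private final double maxSpeed; // speed limit in either direction
    private final int rotateTolPx; // horizontal error allowed before driving engages
    private final int stopTolPx;   // vertical error treated as "arrived"

    RadialServo(double kv, double maxSpeed, int rotateTolPx, int stopTolPx) {
        this.kv = kv;
        this.maxSpeed = maxSpeed;
        this.rotateTolPx = rotateTolPx;
        this.stopTolPx = stopTolPx;
    }

    /** True once the turn-in-place stage is within tolerance, so driving may engage. */
    boolean engaged(int blobX, int targetX) {
        return Math.abs(blobX - targetX) <= rotateTolPx;
    }

    /** Forward (+) or backward (-) speed; zero while still turning or once arrived. */
    double command(int blobX, int blobY, int targetX, int targetY) {
        if (!engaged(blobX, targetX)) return 0.0;
        int err = targetY - blobY; // positive: blob above target, i.e. object still too far
        if (Math.abs(err) <= stopTolPx) return 0.0;
        return Math.max(-maxSpeed, Math.min(maxSpeed, kv * err));
    }
}
```

Keeping the rotation controller running while driving (with a small tolerance) lets it correct heading drift as the robot approaches.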

Detect when the object is close enough to the goal location in the image to stop robot motion and engage the arm. Open the gripper, use your arm IK to lower and then extend it, then close the gripper.
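One way to structure this step is sketched below, assuming the calibrated blob location from the calibration click is the goal. Requiring several consecutive in-tolerance frames keeps a single noisy detection from triggering the arm. `GripperArm` is a hypothetical interface for you to implement with your own gripper commands and arm IK; stop the drive before calling `grasp()`.

```java
/** Hypothetical arm interface; implement it with your own gripper and IK code. */
interface GripperArm {
    void openGripper();
    void lower();   // e.g. IK move of the gripper down toward the ground
    void extend();  // e.g. IK move of the gripper forward toward the object
    void closeGripper();
}

/** Sketch of "close enough to grasp" detection plus the grasp sequence. */
class GraspTrigger {
    private final int tolPx;        // max pixel distance from the calibrated grip location
    private final int framesNeeded; // consecutive in-tolerance frames before triggering
    private int streak = 0;

    GraspTrigger(int tolPx, int framesNeeded) {
        this.tolPx = tolPx;
        this.framesNeeded = framesNeeded;
    }

    /** Feed one frame's blob center; returns true once it is time to stop and grasp. */
    boolean update(int blobX, int blobY, int targetX, int targetY) {
        int dx = blobX - targetX, dy = blobY - targetY;
        streak = (dx * dx + dy * dy <= tolPx * tolPx) ? streak + 1 : 0;
        return streak >= framesNeeded;
    }

    /** Open the gripper, lower, extend, then close, in the order the lab describes. */
    static void grasp(GripperArm arm) {
        arm.openGripper();
        arm.lower();
        arm.extend();
        arm.closeGripper();
    }
}
```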

Return “home” with the object, i.e. return the robot to the global pose it had when you hit ‘r’. For example, if your program initializes the odometry pose to the origin when ‘r’ is hit, then driving back to the origin with the original heading is equivalent to returning “home”. Also make sure to finish motion with the arm in its kinematic home pose.
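As a sketch of the geometry, assume odometry was reset to (x, y, θ) = (0, 0, 0) when ‘r’ was pressed, with x and y in consistent length units and θ in radians, counterclockwise positive. One simple (not the only) plan is turn, drive straight, turn: rotate to face the origin, drive the straight-line distance, then rotate back to θ = 0. `ReturnHome` is a hypothetical helper; execute its output with whatever rotation and straight-line drive commands you already use.

```java
/** Hypothetical turn / drive / turn plan back to the origin pose (0, 0, 0). */
class ReturnHome {
    /** Wrap an angle in radians to (-pi, pi]. */
    static double wrap(double a) {
        double t = Math.IEEEremainder(a, 2 * Math.PI); // result lies in [-pi, pi]
        return t <= -Math.PI ? t + 2 * Math.PI : t;
    }

    /**
     * Given the current pose, return {firstTurn, distance, finalTurn}: rotate in place by
     * firstTurn to face the origin, drive forward by distance, then rotate by finalTurn.
     */
    static double[] plan(double x, double y, double theta) {
        double distance = Math.hypot(x, y);
        if (distance < 1e-9) return new double[] {0.0, 0.0, wrap(-theta)}; // already there
        double heading = Math.atan2(-y, -x);      // direction from the robot to the origin
        double firstTurn = wrap(heading - theta); // turn to face the origin
        double finalTurn = wrap(-heading);        // from that heading back to theta = 0
        return new double[] {firstTurn, distance, finalTurn};
    }
}
```

Odometry error accumulates over the whole trip, so expect the robot to end up near, rather than exactly at, the starting pose.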

Optional extra credit:

- Detect when the object is not visible in the camera. Either stop and display an error or engage a search behavior to re-acquire the object.

- Verify that the grip succeeded. For example, after attempting to pick up the object, return the arm to its home pose and verify that the object moves in the image as it should if it is actually held in the gripper.
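For the first extra-credit item, a small counter is one way to decide that the object is really gone rather than missed for a single frame. The sketch below is hypothetical; what to do once it reports “lost” (stop and display an error, or start a search such as a slow in-place rotation) is up to you.

```java
/** Hypothetical lost-target detector: lost after more than maxMissed consecutive misses. */
class VisibilityMonitor {
    private final int maxMissed; // consecutive frames without a detection that we tolerate
    private int missed = 0;

    VisibilityMonitor(int maxMissed) { this.maxMissed = maxMissed; }

    /** Call once per frame with whether a blob was found; returns true while lost. */
    boolean update(boolean detected) {
        missed = detected ? 0 : missed + 1;
        return missed > maxMissed;
    }
}
```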

You will be asked to demonstrate your code for the course staff in lab on the due date for this assignment. We will try out your code; 30% of your grade for the lab will be based on the observed behavior. We mainly want to see that your code works and is as bug-free as possible.

The remaining 70% of your grade will be based on your code, which you will hand in following the instructions on the assignments page by 11:59pm on the due date for this assignment. We will consider code completeness, lack of bugs, architecture and organization, documentation, syntactic style, and efficiency, in that order of priority. You must also clearly document, both in your README and in code comments, the contributions of each group member. We want to see roughly equal contributions from each member. If so, the same grade will be assigned to all group members. If not, we will adjust the grades accordingly.
