Control

- Many engineered systems have an input which affects the value of some output. If we are interested in regulating values of the output, as we frequently are, we become interested in algorithms to adjust the inputs accordingly.

- This will clearly be facilitated by making feedback about the current state of the output available to the control algorithm, though it is also possible to try to “blindly” adjust the input.

- Regulation algorithms and their implementations are called control systems.

- The difficulty of the control problem depends on a number of factors including the physical complexity of the system, its time dynamics and those of the desired output trajectory, the resolution and latency of the available feedback sensors, etc.

- Here we will study a collection of common and relatively straightforward control techniques. The modern study of control goes way beyond these.

Feed-Forward Control

- Consider the very relevant control problem of regulating the rotational speed of a DC motor with PWM input.

- We know that the physical dynamics of the system tend to make the output shaft spin according to the polarity of the applied current, which sets the direction of the motor torque, and the pulse width, which relates to the magnitude of the motor torque.

- If we are not sure to what the shaft is connected, then without feedback we have a very hard problem.

- Recall that the shaft rotation depends both on the motor torque, i.e. the torque the motor is applying to the shaft, and the applied torque, i.e. the torque the external world is applying to the shaft.

- It could be the case that the applied torque greatly exceeds the motor torque.

- So let’s consider a much more constrained problem where the motor shaft is not connected to anything.

- Note, there will still be some applied torque in the form of friction internal to the motor.

- The back-EMF effect also plays a very significant role. The faster the motor spins, the grater the back-EMF:

. But the motor torque is proportional to current:

. But the motor torque is proportional to current:

and current is proportional to the difference of applied voltage and the back-EMF:

and current is proportional to the difference of applied voltage and the back-EMF:

. So as the motor accelerates, the increased speed causes a greater back-EMF, which reduces the current through the motor, reducing the motor torque.

. So as the motor accelerates, the increased speed causes a greater back-EMF, which reduces the current through the motor, reducing the motor torque.

- For any fixed PWM setting the motor speed will not go to infinity, but will reach a steady state where the back-EMF balances the effective applied voltage except for a small discrepancy. That voltage difference corresponds to the amount of steady state current (via Ohmm’s law) required to produce a torque that balances friction.

- Accounting engergy is also enlightening. At a fixed PWM input, a fixed amount of energy per time (power) will flow into the motor. The output power is the product of torque times speed. Once acceleration stops, the only torque is that due to friction, effectively a constant. The input power is transformed both into waste heat and output power. The waste heat is also effectively constant at steady state. So the output power

must be constant, which makes

must be constant, which makes

the only variable in this equation

the only variable in this equation

. Thus

. Thus

is also determined.

is also determined.

- Because this is a relatively simple and predictable system, we can try to control it without feedback. This is called feed-forward control.

- One option would be to try to invert a model of the motor dynamics, so that for a given desired velocity trajectory we could find the required trajectory of the input PWM signal. This is not necessarily easy, as the motor does have some internal dynamics, which, for example, can cause time delays between changes in the input vs changes in the output.

- A simpler strategy is to first do an experiment where we do have feedback. We can run the PWM input through its entire range and measure the resulting speed at as many settings as we like.

- If we do this slow enough so that the speed reaches steady state after each change in input, the result is a set of samples of a function that maps PWM inputs to observed speed outputs.

- We can interpolate between these samples (for example using linear interpolation), and then numerically invert the function.

- The result can be boiled down to a look up table (LUT) which maps desired output velocities to corresponding PWM control inputs.

Bang-Bang (On/Off) Control

- The above strategy is limited to cases where we can sufficiently predict the system dynamics a-priori, i.e. to cases of low uncertianty.

- This includes an assumption that noise is not significant.

- In most interesting systems, these are not good assumptions. But we can make progress if we add a sensor to get feedback on the actual motor speed.

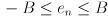

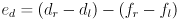

- We can then run a periodic feedback control algorithm at some frequency. At each update the feedback

is measured, compared to the desired value

is measured, compared to the desired value

, and then a control action is taken to try to minimize the error

, and then a control action is taken to try to minimize the error

(note: we need to be consistent with signs here).

(note: we need to be consistent with signs here).

- What frequency? This depends both on properties of your system and the available speed of computation. A reasonable rule of thumb is to aim for control updates at least 10 times as fast as the fastest expected change in the system.

- In practice, frequencies of 50-200 Hz are common for fairly slow and coarse systems and up to 20kHz or more for fast-moving and high-precision systems. (Slow changing systems, such as habitat temperature control, can run at even slower rates.)

- One complication when using position sensors—like pots and encoders—to measure speed is that we need the shaft to move somewhat between successive measurements so that we can take their difference, which will be the speed feedback. The spatial resolution of pots is limited by the resolution of the A/D converter (i.e. its number of output bits). The resolution of encoders is limited by the number of slots on the codewheel. It is also possible to take the difference with respect to the value observed more than one update “ago”, though this effectively averages the speed measurement over a longer time period. This is the strategy we take for the drive motors on OHMM.

- The rest of this lecture will focus on the control action.

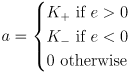

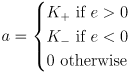

- One of the simplest things we can do is run the motor at a fixed PWM in one direction or the other when

:

:

where

is the calculated PWM action and

is the calculated PWM action and

are constants that we can tune.

are constants that we can tune.

- This is called bang-bang (or on/off) control.

- One problem is that this control will tend to oscillate around the set point.

- We can reduce this effect, at the cost of adding some slop, either by introducing a hysteresis band or a dead band.

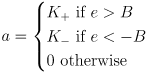

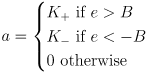

- A dead band turns the control off in some region around the setpoint

where

is another constant we can tune. This may not be great for controlling speed, because the speed will tend to decrease (due to friction) once the control is turned off. Deadbands can be more useful in systems that tend not to diverge immediately when the control action is turned off.

is another constant we can tune. This may not be great for controlling speed, because the speed will tend to decrease (due to friction) once the control is turned off. Deadbands can be more useful in systems that tend not to diverge immediately when the control action is turned off.

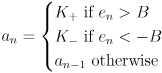

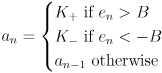

- For a system like the motor under speed control we can adjust this to become a hysteresis band:

where

is the new calculated action at control update

is the new calculated action at control update

and

and

was its value after the last control update.

was its value after the last control update.

is the error at control update

is the error at control update

. This is a valid recursive control action but now

. This is a valid recursive control action but now

is not a function of the present value of

is not a function of the present value of

alone: the value of

alone: the value of

when

when

depends on its history (TBD diagram).

depends on its history (TBD diagram).

- Hysteresis is commonly used in habitat thermostats, where

and

and

(the furnace is either on or off).

(the furnace is either on or off).

- These techniques can reduce the frequency of oscillation but will not eliminiate it. They also introduce slop around the setpoint.

Proportional (P) Control

- The name “bang-bang” control highlights another possibly undesirable property of the above technique:

will make abrupt changes, which can lead to jerky behavior.

will make abrupt changes, which can lead to jerky behavior.

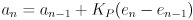

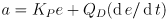

- Instead of setting

to one constant or another, we can make its value proportional to the error:

to one constant or another, we can make its value proportional to the error:

Where

is a proportional gain constant that we can tune.

is a proportional gain constant that we can tune.

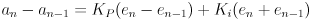

- This can also be formulated recursively by subtracting

from

from

:

:

though this is not important for pure proportional control, it will help our development below.

- The control action will decrease towards zero as the error decreases, and will increase for larger errors.

- This can work well, but also has a few problems.

- Large values of

often lead to oscillation, which can make the system go unstable; the oscillations can get larger and larger until something breaks!

often lead to oscillation, which can make the system go unstable; the oscillations can get larger and larger until something breaks!

- Again, some systems require nonzero action just to maintain steady state. This includes the motor under velocity control. Observe that if we just used a proportional controller, then the control action would be zero whenever the setpoint is reached.

- As long as

is not large enough to cause oscillation, the system will reach a steady state but may have a nonzero error.

is not large enough to cause oscillation, the system will reach a steady state but may have a nonzero error.

- Can we predict in theory what value of

is optimal? Can we predict what the threshold is when oscillation begins (or is possible)? Can we prove that a given choice of

is optimal? Can we predict what the threshold is when oscillation begins (or is possible)? Can we prove that a given choice of

will never lead to instability? These kinds of questions are studied in control theory. In many cases the answers are now known for certain types of systems, and strategies are at least known for analyzing many systems of practical interest.

will never lead to instability? These kinds of questions are studied in control theory. In many cases the answers are now known for certain types of systems, and strategies are at least known for analyzing many systems of practical interest.

Proportional-Integral (PI) Control

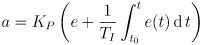

- One idea to address the residual error in proportional control of a system which requires nonzero control action to maintain steady state is to add up or integrate the residual error over time, and then also incorporate that value in the control law:

Here, the integration is done over a period of time from

in the past until now (

in the past until now (

). That way, if there was ever an error during that time, we can have a nonzero control action. (One way to understand why we divide the integral by a time constant

). That way, if there was ever an error during that time, we can have a nonzero control action. (One way to understand why we divide the integral by a time constant

is to reason about units.)

is to reason about units.)

- In practice, this is usually rearranged at least into this form:

Where

is a tunable constant, so that the effect of the integral term is independently controllable.

is a tunable constant, so that the effect of the integral term is independently controllable.

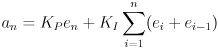

- Recalling that the actual implementation of the controller operates in discrete, not continuous time, as we have framed the above integral, one way to implement this is by maintaining a sum of the error values as they are calculated at each update.

- If

effectively fixed at

effectively fixed at

, i.e. the time when control began, we just need to keep one variable to store the running total. This is a common setup.

, i.e. the time when control began, we just need to keep one variable to store the running total. This is a common setup.

- Another possibility is to have

be some amount of time in the past relative to

be some amount of time in the past relative to

, for example,

, for example,

. For that you generally need to store an array of prior error values. At each control update you discard the oldest value in that array, insert the new value, and re-calculate the sum.

. For that you generally need to store an array of prior error values. At each control update you discard the oldest value in that array, insert the new value, and re-calculate the sum.

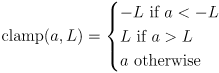

- In either case, the sum is a discrete estimate of the integral. It is usually necessary to clamp the maximum magnitude of the sum to a limit so that it does not grow without bound, which can make the integral term have too large of an effect (and, if using integer math, it could possibly overflow).

- There is an elegant recursive form for the discrete-time PI control law, in the case

.

.

- First replace the integral with a discrete sum based on the trapezoid approximation:

where the latter form includes the

in the constant.

in the constant.

- Now subtract

from

from

:

:

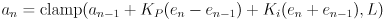

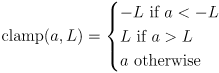

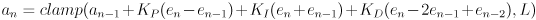

and clamp the control action to limit the cumulative effect of integration:

where

and

is a constant limit for the control action (another value that can be tuned).

is a constant limit for the control action (another value that can be tuned).

Proportional-Derivative (PD) Control

- The derivative of the error is also useful in control. In particular, when the control setpoint

is changed quickly, the error will also usually change quickly because the system state measured by the feedback

is changed quickly, the error will also usually change quickly because the system state measured by the feedback

will take some time to respond. This can make the derivative of

will take some time to respond. This can make the derivative of

larger right after changes in

larger right after changes in

, which is also when we may want a larger control action.

, which is also when we may want a larger control action.

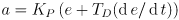

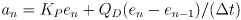

- Starting with a P controller, we can add another control term that is proportional to

:

:

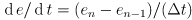

- In a discrete time implementation, we can approximate

as the change in

as the change in

from one control update to the next divided by the control update period

from one control update to the next divided by the control update period

:

:

where the latter form includes the

in the constant.

in the constant.

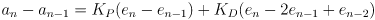

- We can also formulate this recursively by subtracting

from

from

:

:

Proportional-Integral-Derivative (PID) Control

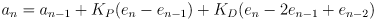

- We can get the benefits of all three terms together in Proportional-Integral-Derivative (PID) control. This form is a workhorse of basic control. Note that any of the terms can be omitted just by setting the corresponding gain to zero:

- There are now three control gains plus the limit

that need to be tuned. We may also consider tuning the update period

that need to be tuned. We may also consider tuning the update period

. In some cases (often) it can be tricky to find a good part of the “parameter space” in which to set these values. There are theoretically optimal ways to calculate them in many cases, though those usually depend on knowing a lot about the system you’re controlling.

. In some cases (often) it can be tricky to find a good part of the “parameter space” in which to set these values. There are theoretically optimal ways to calculate them in many cases, though those usually depend on knowing a lot about the system you’re controlling.

- In practice, for non-life-threatening and simple control problems, many people use heruistics and a guess-and-check approach to setting the parameters. The textbook ER has a reasonable writeup of such a heruistic procedure.

Differential Drive Velocity Control

- We can implement two independent velocity controllers for the two wheels in a diff drive robot, to regulate them to commanded rotational velocities

.

.

- In theory, this would be correct, but in practice there are often issues due to the fact that the controllers are not perfect, and the wheel motions will not be exactly symmetric.

- For example, commanding equal velocities on each side should make the robot go straight (recall that we had defined the positive signs each velocity so that the robot goes forward when

is positive). But in practice, it will wiggle unless we take further action.

is positive). But in practice, it will wiggle unless we take further action.

- A common approach is to carefully arrange a pure I controller that acts cross-wise between the two wheels. It integrates the quantity

which is proportional to the difference between the disired rate of ccw rotation and its actual rate.

- Clamping is applied and the result is multipled by a tunable gain constant.

- Finally the result is added to the right motor control action and subtracted from the left motor control action. (If

is positive then the robot is not turning CCW fast enough, so speed up the right motor and slow down the left.)

is positive then the robot is not turning CCW fast enough, so speed up the right motor and slow down the left.)

- This will work both in the case that the robot is commanded to drive straight (

) and when it has been commanded to turn.

) and when it has been commanded to turn.

- We have included this feature in the

mot module of the OHMM monitor.

Trajectory Following and Position Control

- Controllers can be layered on top of each other.

- A reasonable way to implement position control for a DC motor with an encoder is to start with a PID velocity controller.

- Then on top of that, implement a PD position controller.

- The feedback of the position controller is taken directly from the encoder.

- The position controller outputs a velocity command, which is then sent as the input to the velocity controller.

- No integral term is needed (or desired) because this is a case where the control action should be zero at steady state: when the robot has reached the goal position its velocity should be zero.

- To drive a significant distance, starting and stopping at rest, we may want to first ramp the speed up to some maximum, hold that for a period of time, and then ramp down.

- This is generally called trapezoid velocity profiling. Note that it is the plot of velocity vs time that looks like a trapezoid. The plot of position vs time will be quadratically increasing at first, then linearly increasing, then again quadratically increasing, but with opposite curvature from the beginning phase.

- A simple way to do this, which can work in some cases, is to command the velocity profile open-loop. Then turn on a bang-bang controller (with some deadband) when the goal is approached.

- The velocity profile can even omit the acceleration/deceleration phases as long as the maximum velocity is kept reasonably low.

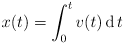

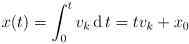

- Another way is to use PD position control on top of velocity control. First calculate the ideal velocity profile, then integrate it it to a position profile:

- This is paricularly easy for the case of constant velocity

:

:

or

or

(Here

is not necessarily the control update period, but any amount of time that has passed while the velocity was held constant.)

is not necessarily the control update period, but any amount of time that has passed while the velocity was held constant.)

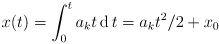

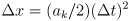

- Linear acceleration/deceleration is also pretty easy:

:

:

or

or

- A trapezoid profile is just the piecewise sum of a linear acceleration phase, a constant velocity phase, and then a linear deceleration phase.

- These calculations are also helpful in determining the time breakpoints between the three phases of the trapezoid profile given the total desired travel distance, the maximum speed

, and an acceleration rate

, and an acceleration rate

.

.

- Finally, apply the calculated position profile as the command input to the PD position controller. The robot should follow the trapezoid velocity profile and also have a controlled position at all times, including once the goal is reached.

- For a diff drive robot, straight drive segments and turn-in-place segments can be implemented directly with the above ideas, because in those cases one of

is held zero. It is also possible to do position control and profiling if both

is held zero. It is also possible to do position control and profiling if both

are nonzero, but the command profiles need to be calculated in such a way that they remain synchronized so the robot follows the desired trajectory (and ends up in the desired pose).

are nonzero, but the command profiles need to be calculated in such a way that they remain synchronized so the robot follows the desired trajectory (and ends up in the desired pose).

. But the motor torque is proportional to current:

. But the motor torque is proportional to current:

and current is proportional to the difference of applied voltage and the back-EMF:

and current is proportional to the difference of applied voltage and the back-EMF:

. So as the motor accelerates, the increased speed causes a greater back-EMF, which reduces the current through the motor, reducing the motor torque.

. So as the motor accelerates, the increased speed causes a greater back-EMF, which reduces the current through the motor, reducing the motor torque. must be constant, which makes

must be constant, which makes

the only variable in this equation

the only variable in this equation

. Thus

. Thus

is also determined.

is also determined.