Return to basic course information.

Assigned: Thursday, 3 October 2013

Due: Email TAs with subject "CS6200 Project 1" by Monday, 21 October 2013, 6pm

// P is the set of all pages; |P| = N

// S is the set of sink nodes, i.e., pages that have no out links

// M(p) is the set of pages that link to page p

// L(q) is the number of out-links from page q

// d is the PageRank damping/teleportation factor; use d = 0.85 as is typical

foreach page p in P

PR(p) = 1/N /* initial value */

while PageRank has not converged do

sinkPR = 0

foreach page p in S /* calculate total sink PR */

sinkPR += PR(p)

foreach page p in P

newPR(p) = (1-d)/N /* teleportation */

newPR(p) += d*sinkPR/N /* spread remaining sink PR evenly */

foreach page q in M(p) /* pages pointing to p */

newPR(p) += d*PR(q)/L(q) /* add share of PageRank from in-links */

foreach page p

PR(p) = newPR(p)

return PR

In order to facilitate the computation of PageRank using the above pseudocode, one would ideally have access to an in-link respresentation of the web graph, i.e., for each page p, a list of the pages q that link to p.

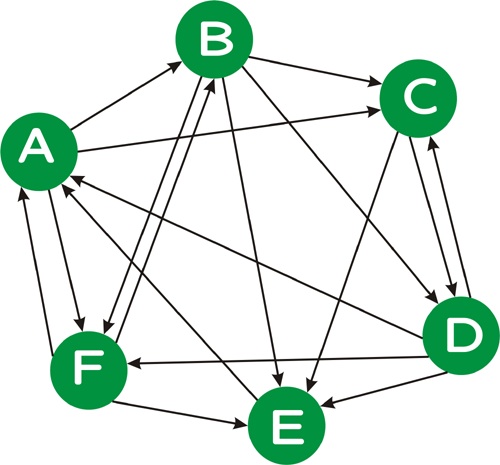

Consider the following directed graph:

We can represent this graph as follows:

A D E F B A F C A B D D B C E B C D F F A B Dwhere the first line indicates that page A is linked from pages D, E, and F, and so on. Note that, unlike this example, in a real web graph, not every page will have in-links, nor will every page have out-links.

To hand in: List the PageRank values you obtain for each of the six vertices after 1, 10, and 100 iterations of the PageRank algorithm.

Run your iterative version of PageRank algorithm until your PageRank values "converge". To test for convergence, calculate the perplexity of the PageRank distribution, where perplexity is simply 2 raised to the (Shannon) entropy of the PageRank distribution, i.e., 2H(PR). Perplexity is a measure of how "skewed" a distribution is --- the more "skewed" (i.e., less uniform) a distribution is, the lower its preplexity. Informally, you can think of perplexity as measuring the number of elements that have a "reasonably large" probability weight; technically, the perplexity of a distribution with entropy h is the number of elements n such that a uniform distribution over n elements would also have entropy h. (Hence, both distributions would be equally "unpredictable".)

Run your iterative PageRank algorthm, outputting the perplexity of your PageRank distibution until the perplexity value no longer changes in the units position for at least four iterations. (The units position is the position just to the left of the decimal point.)

For debugging purposes, here are the first five perplexity values that you should obtain (roughly, up to numerical instability):

To hand in: List the perplexity values you obtain in each round until convergence as described above.

To hand in: List the document IDs of the top 50 pages as sorted by PageRank, together with their PageRank values. Also, list the document IDs of the top 50 pages by in-link count, together with their in-link counts.

will bring up document WT04-B22-268, which is an article on the Comprehensive Test Ban Treaty.

To hand in: Speculate why these documents have high PageRank values, i.e., why is it that these particular pages are linked to by (possibly) many other pages with (possibly) high PageRank values. Are all of these documents ones that users would likely want to see in response to an appropriate query? Which one are and which ones are not? For those that are not "interesting" documents, why might they have high PageRank values? How do the pages with high PageRank compare to the pages with many in-links? In short, give an analysis of the PageRank results you obtain.